Build a Wikipedia chatbot with LangChain

Wikipedia often ranks near the top of search engine results because it’s a trusted site. DataStax built WikiChat, a way to ask Wikipedia questions and get back natural language answers, using Next.js, LangChain, Vercel, OpenAI, Cohere, and Astra DB Serverless.

Astra DB Serverless can concurrently ingest any Wikipedia updates, reindex them, and make them available for users to query without any delay to rebuild indexes.

Objective

In this tutorial, you will build a chatbot with the 1,000 most popular Wikipedia pages and use Wikipedia’s real-time updates feed to update its store of information. Similar to many retrieval-augmented generation (RAG) applications, you can build and deploy a Wikipedia-based chatbot with two parts:

- Data ingest

  For data ingest, WikiChat includes a list of sources to scrape. WikiChat uses LangChain to chunk the text data, Cohere to create the embeddings, and then Astra DB Serverless to store all of this data.

- Web app

  For the conversational tone, WikiChat is built on Next.js, Vercel’s AI library, Cohere, and OpenAI. When a user asks a question, we use Cohere to create the embeddings for that question, query Astra DB Serverless using vector search, and then feed those results into OpenAI to create a conversational response for the user.
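The two parts above can be sketched end to end in a few lines. This is a minimal illustration under stated assumptions, not WikiChat's code: `chunk_text` stands in for the LangChain splitter, `toy_embed` is a hashed bag-of-words stand-in for Cohere embeddings, an in-memory list stands in for Astra DB Serverless, and the prompt wording in `build_prompt` is hypothetical. All function names are invented for this example.

```python
import hashlib
import math
import re


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping character windows (stand-in for a LangChain splitter)."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hash each token into one of `dim` buckets and L2-normalize (NOT a real embedding model)."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def top_k(question: str, store: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k stored chunks whose vectors have the highest dot product with the question."""
    q = toy_embed(question)
    ranked = sorted(
        store,
        key=lambda item: sum(a * b for a, b in zip(q, item[1])),
        reverse=True,
    )
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble the text that would be sent to the chat model (hypothetical wording)."""
    context = "\n---\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    article = (
        "Wikipedia is a free online encyclopedia written by volunteers. "
        "Its articles are edited continuously, so a chatbot that relies on "
        "them needs to pick up changes soon after they happen."
    )
    # Ingest: chunk the article and store each chunk with its vector.
    store = [(c, toy_embed(c)) for c in chunk_text(article, chunk_size=80, overlap=20)]
    # Query: embed the question, retrieve similar chunks, build the prompt.
    question = "Who writes Wikipedia?"
    print(build_prompt(question, top_k(question, store, k=2)))
```

In the real application, the embedding and storage steps are network calls to Cohere and Astra DB Serverless; the shape of the flow (chunk, embed, store, then embed the question, search, and prompt) is the part this sketch is meant to show.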

Prerequisites

To complete this tutorial, you’ll need the following:

- An active Astra account

- A paid Cohere account

- A paid OpenAI account

You should also be proficient in the following tasks:

- Interacting with databases.

- Running a basic Python script.

- Entering parameters in a user interface for Vercel.

Clone the Git repository

Clone the chatbot Git repository and switch to that directory.

git clone https://github.com/datastax/wikichat.git

cd wikichat

Create your Serverless (Vector) database

- In the Astra Portal, select Databases in the main navigation.

- Select Create Database.

- In the Create Database dialog, select the Serverless (Vector) deployment type.

- In Configuration, enter a meaningful Database name.

  You can’t change database names. Make sure the name is human-readable and meaningful. Database names must start and end with an alphanumeric character, and can contain the following special characters: & + - _ ( ) < > . , @

- Select your preferred Provider and Region.

  You can select from a limited number of regions if you’re on the Free plan. Regions with a lock icon require that you upgrade to a Pay As You Go plan.

- Click Create Database.

  You are redirected to your new database’s Overview screen. Your database starts in Pending status before transitioning to Initializing. You’ll receive a notification once your database is initialized.
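Because a database name can't be changed, it can be worth checking a candidate name against the naming rule from the Configuration step before you submit it. The sketch below encodes only what that step states, plus some assumptions that are not confirmed by this tutorial: "alphanumeric" is read as ASCII letters and digits, spaces are treated as not allowed, and no length limit is enforced. `is_valid_db_name` is a hypothetical helper, not part of any Astra tooling.

```python
import re

# Special characters listed in the Configuration step.
ALLOWED_SPECIALS = "&+-_()<>.,@"

# Assumption: ASCII alphanumerics only, no spaces, no length limit.
_NAME_RE = re.compile(
    rf"^[A-Za-z0-9](?:[A-Za-z0-9{re.escape(ALLOWED_SPECIALS)}]*[A-Za-z0-9])?$"
)


def is_valid_db_name(name: str) -> bool:
    """Return True if name starts and ends with an alphanumeric character
    and otherwise contains only alphanumerics or the listed specials."""
    return bool(_NAME_RE.match(name))


if __name__ == "__main__":
    for candidate in ("wikichat_db", "-wikichat", "wiki(chat)", "wiki(chat)1"):
        print(candidate, is_valid_db_name(candidate))
```

The Astra Portal remains the authority on what it accepts; treat this as a local pre-check only.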

Set your environment variables

-

In your

wikichatdirectory, copy the.env.examplefile at the root of the project to.env. -

Store the credentials and configuration information for the APIs to build this app in

.env.-

In your Astra DB Serverless database dashboard, copy the API Endpoint for your

ASTRA_DB_API_ENDPOINTenvironment variable. -

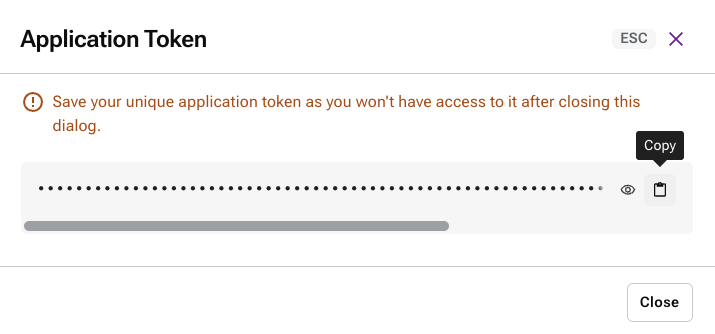

In your Astra DB Serverless database dashboard, click Generate Token. Copy the token for your

ASTRA_DB_APPLICATION_TOKENenvironment variable.

-

Set the

ASTRA_DB_NAMESPACEenvironment variable todefault_keyspace. -

In the OpenAI Platform, get your API key to use for your

OPENAI_API_KEYenvironment variable. -

In the Cohere Dashboard, get your API key to use for your

COHERE_API_KEYenvironment variable.

-

You should now have the following environment variables set in .env for your database:

ASTRA_DB_API_ENDPOINT=ENDPOINT

ASTRA_DB_APPLICATION_TOKEN=APPLICATION_TOKEN

ASTRA_DB_NAMESPACE=default_keyspace

OPENAI_API_KEY=OPENAI_API_KEY

COHERE_API_KEY=COHERE_API_KEYInstall the dependencies

- In the project root directory, create a virtual environment.

  python3 -m venv .venv

- Activate the environment for the current terminal session.

  source .venv/bin/activate

- Install the project package and dependencies.

  npm install

  pip3 install -r requirements.txt
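Before loading data, it may help to confirm that .env defines all five variables from the environment variables step. The following is a minimal sketch that assumes .env uses plain KEY=VALUE lines; `parse_env` and `missing_keys` are hypothetical helpers written for this example and are not part of the WikiChat repository.

```python
from pathlib import Path

# The five variables this tutorial asks you to set in .env.
REQUIRED_KEYS = (
    "ASTRA_DB_API_ENDPOINT",
    "ASTRA_DB_APPLICATION_TOKEN",
    "ASTRA_DB_NAMESPACE",
    "OPENAI_API_KEY",
    "COHERE_API_KEY",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: dict[str, str]) -> list[str]:
    """Return required keys that are absent or set to an empty value."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


if __name__ == "__main__":
    env_file = Path(".env")
    text = env_file.read_text(encoding="utf-8") if env_file.exists() else ""
    missing = missing_keys(parse_env(text))
    print("Missing:", ", ".join(missing) if missing else "none")
```

Run it from the project root. The check only confirms that each variable has a value, not that the credentials are accepted by Astra, OpenAI, or Cohere.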

Load the data

- Run the wiki_data.py script with the load-and-listen command to ingest the articles and listen for changes.

  python3 scripts/wiki_data.py load-and-listen

  Results

  2024-01-23 14:03:34.058 - INFO - root - unknown_worker - Running command load-and-listen with args LoadPipelineArgs(max_articles=2000, truncate_first=False, rotate_collections_every=100000, max_file_lines=0, file='scripts/data/wiki_links.txt')
  2024-01-23 14:03:34.058 - INFO - root - unknown_worker - Starting...
  2024-01-23 14:03:34.058 - INFO - root - unknown_worker - Reading links from file scripts/data/wiki_links.txt limit is 0
  2024-01-23 14:03:34.059 - INFO - root - unknown_worker - Read 978 links from file scripts/data/wiki_links.txt
  2024-01-23 14:03:34.060 - INFO - root - unknown_worker - Starting to listen for changes
  2024-01-23 14:03:34.067 - INFO - root - unknown_worker -
  Processing:
      Total Time (h:mm:s): 0:00:02.189130
      Report interval (s): 10
  Wikipedia Listener:
      Total events: 0 (total) 0.0 (op/s)
      Canary events: 0 (total) 0.0 (op/s)
      Bot events: 0 (total) 0.0 (op/s)
      Skipped events: 0 (total) 0.0 (op/s)
      enwiki edits: 0 (total) 0.0 (op/s)
  Chunks:
      Chunks created: 0 (total) 0.0 (op/s)
      Chunk diff new: 0 (total) 0.0 (op/s)
      Chunk diff deleted: 0 (total) 0.0 (op/s)
      Chunk diff unchanged: 0 (total) 0.0 (op/s)
      Chunks vectorized: 0 (total) 0.0 (op/s)
  Database:
      Rotations: 0 (total) 0.0 (op/s)
      Chunks inserted: 0 (total) 0.0 (op/s)
      Chunks deleted: 0 (total) 0.0 (op/s)
      Chunk collisions: 0 (total) 0.0 (op/s)
      Articles read: 0 (total) 0.0 (op/s)
      Articles inserted: 0 (total) 0.0 (op/s)
  Pipeline: {'load_article': 968, 'chunk_article': 0, 'calc_chunk_diff': 0, 'vectorize_diff': 0, 'store_article_diff': 0}
  Errors: None
  Articles:
      Skipped - redirect: 0 (total) 0.0 (op/s)
      Skipped - zero vector: 0 (total) 0.0 (op/s)
  Recent URLs: None

- Open a new terminal and start a development server.

  npm run dev

- Open http://localhost:3000 to view the chatbot in your browser.
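The status report from the load step counts chunk diffs as new, deleted, and unchanged, which is how the pipeline avoids re-embedding text that hasn't changed. This tutorial doesn't show how WikiChat computes that diff; one common approach, sketched here purely as an assumption, is to hash each chunk's content and compare the hashes already stored for an article with the hashes of its freshly chunked text. `ChunkDiff`, `chunk_hash`, and `diff_chunks` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ChunkDiff:
    new: list[str]        # chunk texts that need to be embedded and inserted
    deleted: list[str]    # hashes of stored chunks that no longer appear
    unchanged: list[str]  # chunk texts that can be skipped


def chunk_hash(chunk: str) -> str:
    """Stable content hash used as the chunk's identity."""
    return hashlib.sha256(chunk.encode("utf-8")).hexdigest()


def diff_chunks(stored_hashes: set[str], current_chunks: list[str]) -> ChunkDiff:
    """Compare hashes already stored for an article with its current chunks."""
    # dict preserves insertion order, so results follow the article's chunk order.
    current = {chunk_hash(c): c for c in current_chunks}
    new = [c for h, c in current.items() if h not in stored_hashes]
    unchanged = [c for h, c in current.items() if h in stored_hashes]
    deleted = sorted(stored_hashes - set(current))
    return ChunkDiff(new=new, deleted=deleted, unchanged=unchanged)


if __name__ == "__main__":
    before = ["Intro paragraph.", "History section.", "References."]
    after = ["Intro paragraph.", "History section, revised.", "References."]
    stored = {chunk_hash(c) for c in before}
    result = diff_chunks(stored, after)
    print(len(result.new), len(result.deleted), len(result.unchanged))
```

With this scheme, only the chunks in `new` would need an embedding call, and only the hashes in `deleted` would need to be removed from the database.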

Deploy your chatbot

You can deploy WikiChat to a serverless environment, such as Vercel.

- In the Vercel Dashboard, search for and import the third-party Git repo from https://github.com/datastax/wikichat.

- Select the Next.js Framework Preset option.

- Set the Environment Variables to match the ones you defined above.

- Click Deploy.

  After a few minutes, you can see your deployed WikiChat app.

After you deploy in Vercel the first time, auto-deployment triggers for each subsequent commit.

For more about using Vercel, see the Vercel documentation.

Conclusion

In this tutorial, you built a chatbot that can answer questions about the most popular and recently updated pages on Wikipedia. Here’s what you accomplished:

- Loaded an initial dataset by scraping the 1,000 most popular Wikipedia articles.

- Listened for real-time updates and only processed diffs.

- Chunked text data using LangChain and generated embeddings using Cohere.

- Stored application and vector data in Astra DB Serverless.

- Built a web-based chatbot UI using Vercel AI SDK.

- Performed vector search with Astra DB Serverless.

- Generated accurate and context-aware responses using OpenAI.

You can now customize the code to scrape your own sites, change the prompts, and change the frontend to improve your users' experience.

See also

For more about the WikiChat project, see the following resources:

If you want to get hands-on experience with a Serverless (Vector) database as quickly as possible, begin with our Quickstart.

You can also read our intro to vector databases.