Build a recommendation system with vector search, LangChain, and OpenAI

Objective

In this tutorial, you will build a simple product recommendation system that uses vector search to display similar products to the user. As products are added or updated, the embeddings in the database are automatically updated. Because a large language model (LLM) and vector search determine which products are similar, you do not have to categorize the products manually, which reduces the effort needed to manage the recommendations.

Prerequisites

To complete this tutorial, you’ll need the following:

- An active Astra account

- An OpenAI account with a positive credit balance

You should also be proficient in the following tasks:

- Interacting with databases.

- Running a basic Python script.

- Entering parameters in a user interface for Vercel.

Clone the Git repository

Clone the recommendations starter Git repository and switch to that directory.

git clone https://github.com/datastax/astra-db-recommendations-starter/

cd astra-db-recommendations-starter

Create your Serverless (Vector) database

- In the Astra Portal, select Databases in the main navigation.

- Select Create Database.

- In the Create Database dialog, select the Serverless (Vector) deployment type.

- In Configuration, enter a meaningful Database name.

You can’t change database names. Make sure the name is human-readable and meaningful. Database names must start and end with an alphanumeric character, and can contain the following special characters:

& + - _ ( ) < > . , @

- Select your preferred Provider and Region.

You can select from a limited number of regions if you’re on the Free plan. Regions with a lock icon require that you upgrade to a Pay As You Go plan.

- Click Create Database.

You are redirected to your new database’s Overview screen. Your database starts in Pending status before transitioning to Initializing. You’ll receive a notification once your database is initialized.
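Because a database name cannot be changed later, it can help to check a candidate name before you create the database. The following is a minimal sketch that encodes only the naming rule stated above. The function name is hypothetical, and the sketch rejects every character that is not listed (including spaces), which may be stricter than what the Astra Portal accepts.

```python
import re

# Assumption: only the rule stated in the text is checked. Length limits and
# any characters not listed there (such as spaces) are treated as invalid.
ALLOWED_SPECIALS = "&+-_()<>.,@"
NAME_PATTERN = re.compile(
    r"[A-Za-z0-9](?:[A-Za-z0-9" + re.escape(ALLOWED_SPECIALS) + r"]*[A-Za-z0-9])?"
)


def is_valid_database_name(name: str) -> bool:
    """Return True if name starts and ends with an alphanumeric character
    and otherwise contains only alphanumerics or the listed specials."""
    return NAME_PATTERN.fullmatch(name) is not None


for candidate in ("product_recs", "shop.recs-v2", "_recs", "recs-"):
    print(candidate, is_valid_database_name(candidate))
```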

Set your environment variables

Export the following environment variables.

export ASTRA_DB_API_ENDPOINT=API_ENDPOINT # Your database API endpoint

export ASTRA_DB_APPLICATION_TOKEN=TOKEN # Your database application token

export OPENAI_API_KEY=API_KEY # Your OpenAI API key

export VECTOR_DIMENSION=1536

Set up the code
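Before installing the dependencies, it can be worth confirming that the variables exported earlier are visible to Python, since both the loader and the backend read them. This is a small sketch and not part of the starter repository; the helper name missing_env_vars is hypothetical.

```python
import os

# The four variables exported in the previous section
REQUIRED_VARS = (
    "ASTRA_DB_API_ENDPOINT",
    "ASTRA_DB_APPLICATION_TOKEN",
    "OPENAI_API_KEY",
    "VECTOR_DIMENSION",
)


def missing_env_vars(environ=os.environ):
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


missing = missing_env_vars()
print("Missing variables:", ", ".join(missing) if missing else "none")
```

Run it in each terminal you open, because exported variables do not carry over to a new terminal session.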

- Install project dependencies.

npm install
pip3 install -r requirements.txt

- Load the data you will use in this tutorial.

python3 populate_db/load_data.py populate_db/product_data.csv

OpenAI has rate limits, which could affect how effectively you can complete this tutorial. For more, see OpenAI’s rate limits.

For more about the embeddings, see About the tutorial.
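If the load step is interrupted by rate limiting, one common mitigation is to retry the failing call with exponential backoff. The sketch below is generic and is not taken from the starter repository: call_with_backoff is a hypothetical helper, and in practice retry_on would be narrowed to the rate-limit exception raised by the client you use.

```python
import random
import time


def call_with_backoff(func, *args, max_attempts=5, base_delay=1.0,
                      retry_on=(Exception,), sleep=time.sleep, **kwargs):
    """Call func, retrying with exponential backoff and jitter on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func(*args, **kwargs)
        except retry_on:
            if attempt == max_attempts:
                raise  # Give up after the last attempt
            # Wait base_delay * 2^(attempt - 1) seconds, plus up to 10% jitter
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

Wrapping the embedding call this way spaces out the retries (about 1, 2, 4, and 8 seconds with the defaults) instead of failing the whole load on the first rate-limit error.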

Deploy your assistant locally

You can deploy the recommendations assistant to a local environment.

-

Start the backend server, which you installed as part of the requirements.

uvicorn api.index:app --reloadBecause this web server continues to run in the terminal, open a second terminal and export your environment variables again before the next step.

-

In a new terminal, with the same environment variables set, start the web server, which you installed as part of the requirements.

npm run dev -

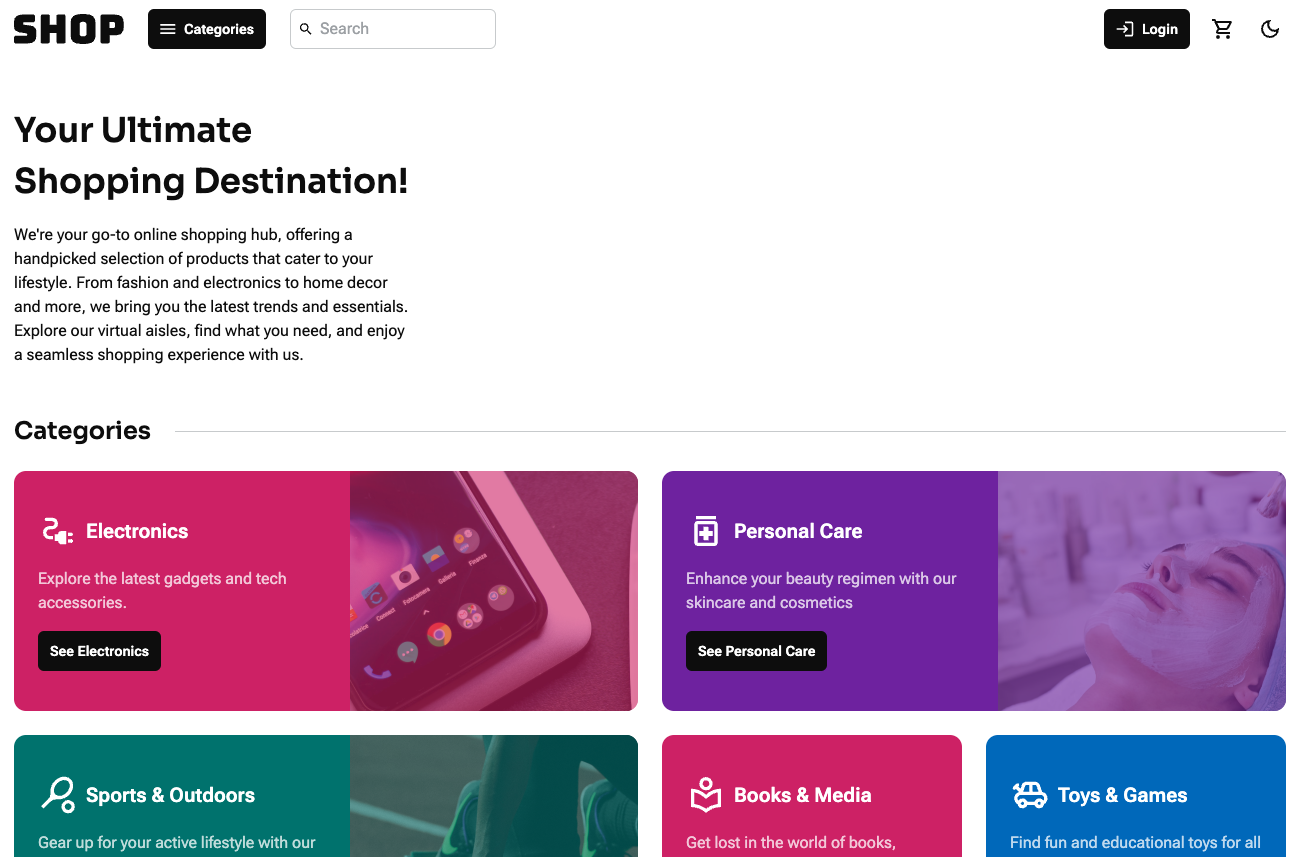

Open http://localhost:3000 to view the shop website with recommendations in your browser.

Deploy your assistant with Vercel

You can deploy the recommendations assistant to a serverless environment, such as Vercel.

- In the Vercel Dashboard, search for and import the third-party Git repo from https://github.com/datastax/astra-db-recommendations-starter.

- Select the Next.js Framework Preset option.

- Set the Environment Variables to match the ones you defined above.

- Click Deploy.

After a few minutes, you will see your deployed recommendations assistant.

After you deploy in Vercel the first time, auto-deployment will trigger for each subsequent commit.

For more about using Vercel, see the Vercel documentation.

About the tutorial

Embeddings

The data for this tutorial come from a marketing sample produced by Amazon.

It contains 10,000 example Amazon products in csv format, which you can see in the /populate_db folder.

This tutorial loads each of these products into our Serverless (Vector) database alongside a vector embedding.

Each vector is generated from text data that’s extracted from each product.

The original csv file has 28 columns: uniq_id, product_name, brand_name, asin, category, upc_ean_code, list_price, selling_price, quantity, model_number, about_product, product_specification, technical_details, shipping_weight, product_dimensions, image, variants, sku, product_url, stock, product_details, dimensions, color, ingredients, direction_to_use, is_amazon_seller, size_quantity_variant, and product_description.

It is important to store all of this information in your database, but not all of it needs to be processed as text for generating recommendations. Our goal is to generate vectors that help identify related products by product name, description, and price. The image links and stock count are not as useful when finding similar products. First, transform the data by cutting it down to a few fields that are good comparisons:

data_file = load_csv_file(filepath)
for row in data_file:
    # Keep only the fields that are useful for comparing products, and
    # normalize the keys, for example "Product Name" -> "product_name"
    to_embed = {key.lower().replace(" ", "_"): row[key] for key in
                ("Product Name", "Brand Name", "Category", "Selling Price",
                 "About Product", "Product Url")}

Product name, brand name, category, selling price, description, and URL are the most useful fields for identifying the product. Convert the resulting dictionary to a JSON string, then embed that string using the OpenAI embedding model “text-embedding-ada-002”. This results in a vector of length 1536. Cast each value as a float because the special numpy float32 type cannot be serialized by json.dumps().

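def embed(text_to_embed):
    # embeddings is the embedding client, defined elsewhere in the script
    embedding = list(embeddings.embed_query(text_to_embed))
    # Cast each component to a plain float so json.dumps() can serialize it
    return [float(component) for component in embedding]

Add the returned vector to the full product JSON object and load it into the database collection.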

# Attach the embedding to the product document under the $vector field
to_insert["$vector"] = embedded_product

# Wrap the document in an insertOne command and POST it to request_url
request_data = {}
request_data["insertOne"] = {"document": to_insert}
response = requests.request("POST", request_url,
                            headers=request_headers, data=json.dumps(request_data))

You now have your vector product database, which you can use for product search and recommendations.
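The three excerpts above can be combined into one offline sketch that shows how a CSV row becomes an insertOne payload. It makes no network calls: fake_embed is a hypothetical stand-in for the OpenAI embedding call and returns a 4-component vector instead of 1536, the sample row is invented, and keeping the row's original keys in the stored document is an assumption.

```python
import json

# Fields used for the embedding text, as in the loader excerpt above
FIELDS_TO_EMBED = ("Product Name", "Brand Name", "Category",
                   "Selling Price", "About Product", "Product Url")


def text_to_embed(row):
    """Reduce a CSV row to the selected fields and serialize it to a string."""
    selected = {key.lower().replace(" ", "_"): row[key] for key in FIELDS_TO_EMBED}
    return json.dumps(selected)


def fake_embed(text, dimension=4):
    """Hypothetical stand-in for the OpenAI call; returns plain Python floats."""
    return [float((len(text) + i) % 7) for i in range(dimension)]


def build_insert_request(row, embed=fake_embed):
    """Build the insertOne payload: the full row plus its $vector."""
    document = dict(row)  # Assumption: all original columns are stored
    document["$vector"] = embed(text_to_embed(row))
    return {"insertOne": {"document": document}}


# Invented sample row; the real CSV has 28 columns
row = {"Product Name": "Toy Car", "Brand Name": "Acme", "Category": "Toys",
       "Selling Price": "$9.99", "About Product": "A small red car.",
       "Product Url": "https://example.com/toy-car", "Stock": "12"}
payload = build_insert_request(row)
print(json.dumps(payload, indent=2))
```

Note that the Stock column is stored in the document but does not influence the vector, which matches the split between stored fields and embedded fields described above.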

Get embeddings for search and recommendations

The user performs a search with the search bar at the top of the user interface. The search query is embedded and used for similarity search on your product database.

The code removes the vector field from the search results, because it’s not needed to populate the catalog. The front-end displays the remaining fields.

Because you stored the vectors in the database, you don’t need to generate embeddings every time you get recommendations. Simply take the current product and use its ID to extract the vector from the database.
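To illustrate the idea, the following in-memory sketch looks up a product's stored vector by ID, ranks the other products by similarity, and drops the vector field from the results. In the tutorial the database performs the similarity search; this code is not how the starter repository does it. Cosine similarity, the _id field name, and the tiny two-component vectors are assumptions chosen for readability.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recommend(product_id, products, limit=3):
    """Rank other products by similarity to the stored vector of product_id."""
    by_id = {p["_id"]: p for p in products}
    query_vector = by_id[product_id]["$vector"]  # Reuse the stored embedding
    others = [p for p in products if p["_id"] != product_id]
    ranked = sorted(others,
                    key=lambda p: cosine_similarity(query_vector, p["$vector"]),
                    reverse=True)
    # Drop the vector before returning; the front end does not display it
    return [{k: v for k, v in p.items() if k != "$vector"} for p in ranked[:limit]]


# Invented sample data with two-component vectors
products = [
    {"_id": "a", "product_name": "Toy Car", "$vector": [1.0, 0.0]},
    {"_id": "b", "product_name": "Toy Truck", "$vector": [0.9, 0.1]},
    {"_id": "c", "product_name": "Garden Hose", "$vector": [0.0, 1.0]},
    {"_id": "d", "product_name": "Toy Tractor", "$vector": [0.5, 0.5]},
]
print(recommend("a", products, limit=2))
```

No embedding call is needed at recommendation time, because the query vector is read from the product that is already stored.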

Backend API

The API is split between a few files:

- index.py contains the actual FastAPI code. It defines an API with a single route: /api/query. Upon receipt of a POST request, the function calls the send_to_openai function from recommender_utils.py.

- recommender_utils.py loads authentication credentials from local_creds and connects to your database.

Prompting to OpenAI with LangChain and FastAPI

recommender_utils.py sends the prompt to OpenAI.

The prompt template takes a search and relevant recommendations as parameters.

# Excerpt from a function body: builds the prompt that is sent to OpenAI
# using the response from the vector database
prompt_boilerplate = (
    "Of the following products, all preceded with PRODUCT NUMBER, select the "
    + str(count)
    + " products most recommended to shoppers who bought the product preceded by "
    + "ORIGINAL PRODUCT below. Return the product_id corresponding to those products."
)
original_product_section = "ORIGINAL PRODUCT: " + json.dumps(
    strip_blank_fields(strip_for_query(get_product(product_id))))
comparable_products_section = "\n".join(string_product_list)
final_answer_boilerplate = "Final Answer: "
nl = "\n"
return (prompt_boilerplate + nl + original_product_section + nl +
        comparable_products_section + nl + final_answer_boilerplate, long_product_list)

These are the required functions:

- strip_for_query gets the product details based on the selected keys, so that only the fields that matter for the comparison reach the prompt.

- strip_blank_fields is applied to the output of strip_for_query and, as its name suggests, removes fields that have no value. This keeps the prompt limited to information relevant to the product.

The stripped product details and the comparable products returned by the vector search are then inserted into the prompt template defined above. This prompt is sent to the GPT model for recommendations.
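The prompt assembly described above can be sketched as a self-contained function. The two helpers here are hypothetical stand-ins whose behavior is inferred from their names, not copied from recommender_utils.py. The selected keys, the "PRODUCT NUMBER id:" line format, and the build_prompt name are also assumptions.

```python
import json


def strip_for_query(product, keys=("product_name", "brand_name", "category",
                                   "selling_price", "about_product")):
    """Hypothetical stand-in: keep only the keys used in the prompt."""
    return {k: product[k] for k in keys if k in product}


def strip_blank_fields(product):
    """Hypothetical stand-in: drop fields whose value is empty or None."""
    return {k: v for k, v in product.items() if v not in ("", None)}


def build_prompt(original, candidates, count):
    """Assemble the prompt sections in the same order as the template above."""
    header = ("Of the following products, all preceded with PRODUCT NUMBER, "
              f"select the {count} products most recommended to shoppers who "
              "bought the product preceded by ORIGINAL PRODUCT below. "
              "Return the product_id corresponding to those products.")
    original_section = "ORIGINAL PRODUCT: " + json.dumps(
        strip_blank_fields(strip_for_query(original)))
    # Assumption: each candidate line is labeled with its product_id
    candidate_lines = [
        f"PRODUCT NUMBER {c['product_id']}: "
        + json.dumps(strip_blank_fields(strip_for_query(c)))
        for c in candidates
    ]
    return "\n".join([header, original_section, *candidate_lines, "Final Answer: "])


# Invented sample products
original = {"product_id": "p0", "product_name": "Toy Car", "brand_name": "",
            "category": "Toys", "selling_price": "$9.99",
            "about_product": "A small red car.", "image": "car.png"}
candidates = [
    {"product_id": "p1", "product_name": "Toy Truck", "category": "Toys"},
    {"product_id": "p2", "product_name": "Garden Hose", "category": "Garden"},
]
prompt = build_prompt(original, candidates, count=1)
print(prompt)
```

Ending the prompt with "Final Answer: " nudges the model to reply with the selected product IDs directly, which makes the response easier to parse.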

Conclusion

In this tutorial, you generated embeddings for Amazon products, stored those embeddings in a Serverless (Vector) database, and used prompt engineering to retrieve and display similar products based on a user’s search query. You also created an application to interact with this recommendation system.

You can now customize the code to add your own products, change the prompts, and change the frontend to improve your users' experience.

See also

If you want to get hands-on with a Serverless (Vector) database as quickly as possible, begin with our Quickstart.

You can also learn more with our intro to vector databases.