Build a chatbot with LangChain

Objective

In this tutorial, you will build a chatbot that answers questions using the FAQs from DataStax’s website. You will generate embeddings with LangChain and OpenAI, which the chatbot will use to find relevant content.

You’ll also create a simple React.js chatbot frontend that works with FastAPI and Vercel’s serverless functions to connect your frontend to Astra DB and the OpenAI Completions API.

Prerequisites

To complete this tutorial, you’ll need the following:

- An active Astra account
- A paid OpenAI account

You should also be proficient in the following tasks:

- Interacting with databases.
- Running a basic Python script.
- Entering parameters in a user interface for Vercel.

Clone the Git repository

Clone the chatbot Git repository and switch to that directory.

git clone https://github.com/datastax/astra-db-chatbot-starter.git

cd astra-db-chatbot-starter

Create your Serverless (Vector) database

- In the Astra Portal, select Databases in the main navigation.

- Select Create Database.

- In the Create Database dialog, select the Serverless (Vector) deployment type.

- In Configuration, enter a meaningful Database name.

  You can’t change database names. Make sure the name is human-readable and meaningful. Database names must start and end with an alphanumeric character, and can contain the following special characters:

  & + - _ ( ) < > . , @

- Select your preferred Provider and Region.

  You can select from a limited number of regions if you’re on the Free plan. Regions with a lock icon require that you upgrade to a Pay As You Go plan.

- Click Create Database.

You are redirected to your new database’s Overview screen. Your database starts in Pending status before transitioning to Initializing. You’ll receive a notification once your database is initialized.

Set your environment variables

-

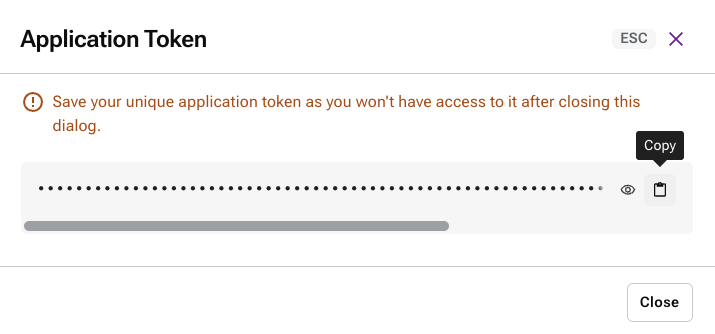

Click Generate Token. Copy the token for your

ASTRA_DB_APPLICATION_TOKENenvironment variable.

export ASTRA_DB_APPLICATION_TOKEN=TOKEN # Your database application token -

Set the

ASTRA_DB_NAMESPACEenvironment variable todefault_keyspace.export ASTRA_DB_NAMESPACE=default_keyspace # A namespace that exists in your database -

Set the

ASTRA_DB_COLLECTIONenvironment variable tocollection. Choose a different name if you already have a collection namedcollection.export ASTRA_DB_COLLECTION=collection # Your database collection -

In your Astra DB Serverless database dashboard, copy the API Endpoint for your

ASTRA_DB_API_ENDPOINTenvironment variable.export ASTRA_DB_API_ENDPOINT=API_ENDPOINT # Your database API endpoint -

In the OpenAI Platform, get your API key to use for your

OPENAI_API_KEYenvironment variable.export OPENAI_API_KEY=API_KEY # Your OpenAI API key -

Set

DIMENSIONSto the number of dimensions for your vector. This tutorial uses the OpenAI text-embedding-ada-002 model, which produces vectors with 1536 dimensions.export VECTOR_DIMENSION=1536 -

Set the

SCRAPED_FILEenvironment variable to the location where you want to store your scraped data.export SCRAPED_FILE=scrape/scraped_results.json
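Once the variables from the steps above are exported, a quick check can catch a missing or empty value before any script runs. The following is a minimal sketch, not part of the starter repository; the helper name missing_env_vars is hypothetical, and the variable names are the ones used in this section.

```python
import os

# Variable names taken from the steps in this section.
REQUIRED_VARS = [
    "ASTRA_DB_API_ENDPOINT",
    "ASTRA_DB_APPLICATION_TOKEN",
    "ASTRA_DB_NAMESPACE",
    "ASTRA_DB_COLLECTION",
    "OPENAI_API_KEY",
    "VECTOR_DIMENSION",
    "SCRAPED_FILE",
]


def missing_env_vars(env=None):
    """Return the required variable names that are unset or empty in env."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Running this in each new terminal is a cheap way to confirm that the exports carried over.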

You should now have the following environment variables set for your database:

export ASTRA_DB_API_ENDPOINT=API_ENDPOINT # Your database API endpoint

export ASTRA_DB_APPLICATION_TOKEN=TOKEN # Your database application token

export ASTRA_DB_NAMESPACE=default_keyspace # A namespace that exists in your database

export ASTRA_DB_COLLECTION=collection # Your database collection

export OPENAI_API_KEY=API_KEY # Your OpenAI API key

export VECTOR_DIMENSION=1536

export SCRAPED_FILE=scrape/scraped_results.json

Set up the code

- Verify that pip is version 23.0 or higher.

  pip --version

  Upgrade pip if needed.

  python -m pip install --upgrade pip

- Install the Node.js and Python dependencies, then create the collection that will hold the data you will use in this tutorial.

  npm install
  pip install -r requirements.txt
  python populate_db/create_collection.py

- Run the load_data.py script to load the scraped website data that lives in scraped_results.json.

  python populate_db/load_data.py

  OpenAI has rate limits, which could affect how effectively you can complete this tutorial. For more, see OpenAI’s rate limits.

  scraped_results.json has the following structure:

  {
    [
      {
        "url": url_of_webpage_scraped_1,
        "title": title_of_webpage_scraped_1,
        "content": content_of_webpage_scraped_1
      },
      ...
    ]
  }

  For more about the embeddings, see About the tutorial.
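If the load step runs into OpenAI's rate limits, one common mitigation is to retry the failing call with exponential backoff. The sketch below is generic and is not taken from the repository: retry_with_backoff and its parameters are hypothetical names, and in practice you would narrow retry_on to the rate-limit exception raised by your OpenAI client.

```python
import random
import time


def retry_with_backoff(func, max_attempts=5, base_delay=1.0, sleep=time.sleep,
                       retry_on=(Exception,)):
    """Call func(); on a retryable error, wait and try again.

    The wait doubles after each failure (base_delay, 2x, 4x, ...) plus a
    little random jitter. The last error is re-raised once attempts run out.
    """
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
            sleep(delay)
```

The sleep parameter exists only so the waiting can be replaced in tests; leave it at its default for real use.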

Deploy your chatbot locally

- Start the web server, which you installed as part of the requirements.

  npm run dev

  Because this web server continues to run in the terminal, open a second terminal and export your environment variables again before the next step.

- Test your API by sending it a request.

  curl --request POST localhost:8000/api/chat \
    --header 'Content-Type: application/json' \
    --data '{"prompt": "Can encryptions keys be exchanged for a particular table?" }'

  The endpoint returns the following response:

  "{\"text\": \"\\nNo, encryptions keys cannot be exchanged for a particular table. However, you can use the GRANT command to configure access to rows within the table. You can also create an entirely new keyspace with a different name if desired.\", \"url\": \"https://docs.datastax.com/en/dse68-security/docs/secFAQ.html\"}"

  This response is a string, so you may need to convert it to JSON.

- Open http://localhost:3000 to view and test the chatbot in your browser.
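Because the /api/chat response shown in this section is a JSON string that itself contains JSON, a client may need to decode it twice. Here is a minimal sketch using only the standard library; decode_chat_response is a hypothetical helper name, and the sample body is a shortened stand-in for the real response.

```python
import json


def decode_chat_response(raw_body):
    """Decode a /api/chat response body into a dict.

    The body is a JSON string whose content is itself JSON, so it may need
    two passes of json.loads. A body that is already a JSON object is
    returned after the first pass.
    """
    decoded = json.loads(raw_body)
    if isinstance(decoded, str):
        decoded = json.loads(decoded)
    return decoded


# Shortened sample, double-encoded the same way as the response above.
sample_body = json.dumps(json.dumps({
    "text": "\nNo, encryptions keys cannot be exchanged for a particular table.",
    "url": "https://docs.datastax.com/en/dse68-security/docs/secFAQ.html",
}))

result = decode_chat_response(sample_body)
print(result["url"])
```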

Deploy your chatbot with Vercel

To see the chatbot in action, you can deploy it to a serverless environment, such as Vercel.

- In the Vercel Dashboard, search for and import the third-party Git repo from https://github.com/datastax/astra-db-chatbot-starter.

- Select the Next.js Framework Preset option.

- Set the Environment Variables to match the ones you defined above.

- Click Deploy.

  After a few minutes, you will see your deployed chatbot app.

After you deploy in Vercel the first time, auto-deployment will trigger for each subsequent commit.

For more about using Vercel, see the Vercel documentation.

About the tutorial

Embeddings

We define our embedding model to match the one used in the vectorization step. We use LangChain’s OpenAI library to connect to the OpenAI API for both completions and embeddings.

The load_data.py script loads the scraped website data that lives in scraped_results.json with the following structure:

{
  [
    {
      "url": url_of_webpage_scraped_1,
      "title": title_of_webpage_scraped_1,
      "content": content_of_webpage_scraped_1
    },
    ...
  ]
}

If you’d like to learn more about how this data is scraped, you can find the scraper and instructions in the README.
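Before embedding anything, it can help to confirm that every scraped record carries the three fields described above. The sketch below is not the repository's loader: load_scraped_pages is a hypothetical helper, and it assumes the top level of the file is a JSON array of page records.

```python
import json
import os
import tempfile

REQUIRED_KEYS = ("url", "title", "content")


def load_scraped_pages(path):
    """Load scraped pages and check each record has url, title, and content.

    Assumption: the file's top level is a JSON array of page records.
    """
    with open(path, encoding="utf-8") as f:
        pages = json.load(f)
    if not isinstance(pages, list):
        raise ValueError("expected a JSON array of page records")
    for i, page in enumerate(pages):
        missing = [key for key in REQUIRED_KEYS if key not in page]
        if missing:
            raise ValueError(f"record {i} is missing keys: {missing}")
    return pages


# Demo with a throwaway file; in the tutorial you would pass the path
# stored in the SCRAPED_FILE environment variable instead.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "scraped_results.json")
    with open(demo_path, "w", encoding="utf-8") as f:
        json.dump(
            [{"url": "https://example.com/faq", "title": "FAQ", "content": "Q?\nA."}],
            f,
        )
    pages = load_scraped_pages(demo_path)

print(len(pages), pages[0]["title"])
```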

This is a portion of the text scraped from the DataStax Premium Support FAQ page:

input_json = {
    "url": "https://www.datastax.com/services/support/premium-support/faq",
    "title": "FAQ | DataStax Premium Support",
    "content": (
        "What is DataStax Premium Support?\n"
        "Premium Support consists of three add-on offerings available to enhance your existing DataStax support experience\n"
        "There are three Premium offerings currently available:\n"
        "Premium Cloud Engineer\n"
        "Named Engineer\n"
        "Technical Account Manager\n"
        "Can I purchase a Premium Support subscription for any DataStax product? \n"
        "You can purchase Premium Support as an add-on to DataStax products that are generally available. Please contact PremiumSupport@datastax.com if you have any questions.\n"
        "What is included in each of the different DataStax technical support offerings?"
        # ...
    )
}

The text in input_json['content'] is split on the newline character.

The lines that are questions are stored in question_lines.

all_lines = [
    ["What is DataStax Premium Support?"],  # 0
    ["Premium Support consists of three add-on offerings available to enhance your existing DataStax support experience"],  # 1
    ["There are three Premium offerings currently available:"],  # 2
    ["Premium Cloud Engineer"],  # 3
    ["Named Engineer"],  # 4
    ["Technical Account Manager"],  # 5
    ["Can I purchase a Premium Support subscription for any DataStax product?"],  # 6
    ["You can purchase Premium Support as an add-on to DataStax products that are generally available. Please contact PremiumSupport@datastax.com if you have any questions."],  # 7
    ["What is included in each of the different DataStax technical support offerings?"],  # 8
    # ...
]

question_lines = [0, 6, 8]  # ...

There are questions on lines 0, 6, 8, and so on. This means that the answers are on lines 1 to 5, 7, and so on.
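The splitting and question detection described above can be sketched in a few lines. This is an illustration rather than the code in load_data.py: the tutorial does not say how questions are detected, so the rule used here (a line that ends with a question mark) is an assumption, and the helper names are hypothetical. Lines are kept as plain strings for brevity.

```python
def split_lines(content):
    """Split scraped text on the newline character and trim each line."""
    return [line.strip() for line in content.split("\n")]


def find_question_lines(lines):
    """Return the indexes of lines that look like questions.

    Assumption: a line is a question if it ends with '?'. The tutorial does
    not state the rule that load_data.py uses.
    """
    return [i for i, line in enumerate(lines) if line.endswith("?")]


content = (
    "What is DataStax Premium Support?\n"
    "Premium Support consists of three add-on offerings available to enhance your existing DataStax support experience\n"
    "There are three Premium offerings currently available:\n"
    "Premium Cloud Engineer\n"
    "Named Engineer\n"
    "Technical Account Manager\n"
    "Can I purchase a Premium Support subscription for any DataStax product? \n"
    "You can purchase Premium Support as an add-on to DataStax products that are generally available. Please contact PremiumSupport@datastax.com if you have any questions.\n"
    "What is included in each of the different DataStax technical support offerings?"
)

all_lines = split_lines(content)
question_lines = find_question_lines(all_lines)
print(question_lines)
```

Trimming each line matters here because one of the scraped questions carries a trailing space before its newline.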

The answer lines for each question are then joined into a single string, and the output data structure is defined:

q_and_a_output_data = {
    "url": "https://www.datastax.com/services/support/premium-support/faq",
    "title": "FAQ | DataStax Premium Support",
    "questions": [
        ["What is DataStax Premium Support?"],
        ["Can I purchase a Premium Support subscription for any DataStax product?"],
        # ...
    ],
    "answers": [
        [(
            "Premium Support consists of three add-on offerings available to enhance your existing DataStax support experience\n"
            "There are three Premium offerings currently available:\n"
            "Premium Cloud Engineer\n"
            "Named Engineer\n"
            "Technical Account Manager"
        )],
        [(
            "You can purchase Premium Support as an add-on to DataStax products that are generally available. "
            "Please contact PremiumSupport@datastax.com if you have any questions."
        )]
        # ...
    ]
}

Once the text is split into answers and questions, each question and answer pair is iterated. The concatenated text of each question and answer is embedded with the OpenAI text-embedding-ada-002 model.
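The pairing and concatenation steps can be sketched as follows. This is a simplified illustration, not the repository's code: the helper names are hypothetical, the lines are plain strings, and the newline used to join a question with its answer is an assumption because the separator is not stated in the tutorial.

```python
def pair_questions_and_answers(lines, question_lines):
    """Pair each question with the lines that follow it, up to the next question."""
    pairs = []
    for pos, q_index in enumerate(question_lines):
        # Answer lines run from just after this question to the next question,
        # or to the end of the text for the last question.
        if pos + 1 < len(question_lines):
            next_q = question_lines[pos + 1]
        else:
            next_q = len(lines)
        answer = "\n".join(lines[q_index + 1:next_q])
        pairs.append((lines[q_index], answer))
    return pairs


def text_to_embed(question, answer):
    """Concatenate a question and its answer into the text sent for embedding.

    Assumption: the two parts are joined with a newline.
    """
    return f"{question}\n{answer}"


# Small stand-in for the scraped lines shown earlier.
lines = ["Q1?", "a", "b", "Q2?", "c", "Q3?"]
pairs = pair_questions_and_answers(lines, [0, 3, 5])
for question, answer in pairs:
    print(repr(text_to_embed(question, answer)))
```

A question with no following lines, such as the last one in this sample, ends up with an empty answer, which a real loader would probably want to skip.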

The first iteration through the question and answer data gives the following result:

question_id = 1
document_id = "https://www.datastax.com/services/support/premium-support/faq"
question = "What is DataStax Premium Support?"
answer = (
    "Premium Support consists of three add-on offerings available to enhance your existing DataStax support experience\n"
    "There are three Premium offerings currently available:\n"
    "Premium Cloud Engineer\n"
    "Named Engineer\n"
    "Technical Account Manager"
)
# (The embedding is a 1 x 1536 vector that we've abbreviated)
embedding = [-0.0077333027, -0.02274981, -0.036987208, ..., 0.052548025]

At each step, the data is pushed to the database:

import json

import requests

# request_url and request_headers are assumed to be defined earlier in the script.
to_insert = {
    "insertOne": {
        "document": {
            "document_id": document_id,
            "question_id": question_id,
            "answer": answer,
            "question": question,
            "$vector": embedding
        }
    }
}

response = requests.request(
    "POST",
    request_url,
    headers=request_headers,
    data=json.dumps(to_insert)
)

The collection is now populated with documents containing the question and answer data and the vector embeddings.
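One way to make the insert step safer is to build the request body in a helper that checks the vector length before anything is sent. The sketch below only constructs the body and does not contact the database; build_insert_payload is a hypothetical name, and the default of 1536 dimensions matches the text-embedding-ada-002 model used in this tutorial.

```python
import json


def build_insert_payload(document_id, question_id, question, answer, embedding,
                         expected_dim=1536):
    """Build an insertOne request body, checking the vector length first."""
    if len(embedding) != expected_dim:
        raise ValueError(
            f"embedding has {len(embedding)} dimensions, expected {expected_dim}"
        )
    return {
        "insertOne": {
            "document": {
                "document_id": document_id,
                "question_id": question_id,
                "answer": answer,
                "question": question,
                "$vector": list(embedding),
            }
        }
    }


payload = build_insert_payload(
    document_id="https://www.datastax.com/services/support/premium-support/faq",
    question_id=1,
    question="What is DataStax Premium Support?",
    answer="Premium Support consists of three add-on offerings",  # shortened sample
    embedding=[0.0] * 1536,  # placeholder vector, not a real embedding
)
body = json.dumps(payload)  # the string you would pass as data= in the request
print(len(payload["insertOne"]["document"]["$vector"]))
```

Passing int(os.environ["VECTOR_DIMENSION"]) as expected_dim would keep the check in step with the environment variable set earlier.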

Backend API

The API is split between a few files:

- index.py contains the actual FastAPI code. It defines an API with a single route: /api/chat. Upon receipt of a POST request, the function calls the send_to_openai function from chatbot_utils.py.

- chatbot_utils.py loads authentication credentials from your environment variables and connects to your database.

Conclusion

Congratulations! In this tutorial, you completed the following:

- Created a Serverless (Vector) database.
- Added data scraped from our FAQ pages.
- Used the scraped data to add context to prompts to the OpenAI API.
- Held a chat session with an LLM.

You can now customize the code to scrape your own sites, change the prompts, and change the frontend to improve your users' experience.

See also

To get hands-on with a Serverless (Vector) database as quickly as possible, begin with our Quickstart.

You can also read our intro to vector databases.