Export metrics via DevOps API

Enterprises depend on the ability to view database health metrics in centralized systems along with their other software metrics. The Astra DB Metrics feature lets you forward Astra DB database health metrics to an external third-party metrics system. We refer to the recipient of the exported metrics as the destination system.

You can configure the export of Astra DB metrics with the DevOps API (described in this topic) or in the Astra Portal.

Benefits

The Astra DB Metrics feature allows you to take full control of forwarding Astra DB database health metrics to your preferred observability system. The functionality is intended for developers, site reliability engineers (SREs), IT managers, and product owners.

Ingesting database health metrics into your system gives you the ability to craft your own alerting actions and dashboards based on your service level objectives and retention requirements. While you can continue to view metrics displayed in Astra Portal via each database’s Health tab, forwarding metrics to a third-party app gives you a more complete view of all metrics being tracked, across all your products.

This enhanced capability can provide your team with broader insights into historical performance, issues, and areas for improvement.

The exported Astra DB health metrics are nearly real-time when consumed externally. You can find the source-of-truth view of your metric values in the Astra Portal’s Health dashboard.

Prerequisites

-

If you haven’t already, create a serverless database using the Astra Portal.

Keep track of your

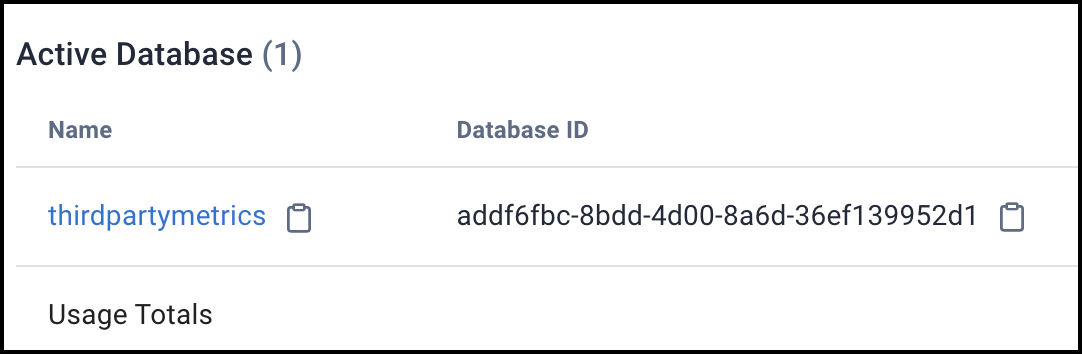

databaseId. You’ll specify it in the DevOpsPOSTAPI call for/v2/databases/{databaseId}/telemetry/metrics. You can find thedatabaseIdon the Astra Portal’s dashboard.Example:

-

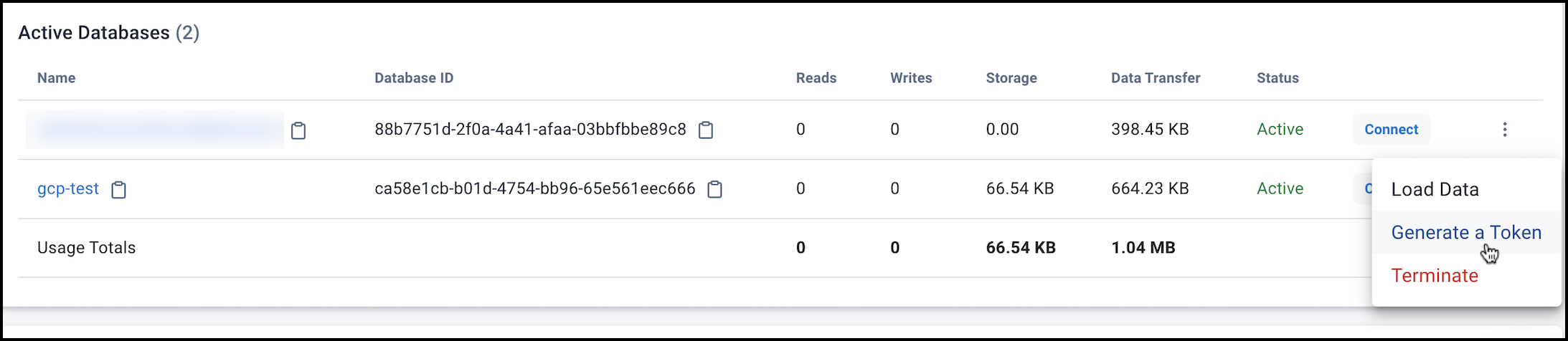

Generate an application token so you can authenticate your account in the DevOps API.

If you don’t have a current token, see Manage application tokens.

Example:

When using the DevOps API, pass in the auth token’s value in the call’s Header.

-

Ensure you have permission to use the DevOps v2 API for enabling third-party metrics. See Roles and permissions in this topic.

You’ll need an existing destination system to receive the forwarded Astra DB metrics. Supported destinations are Amazon CloudWatch, Apache Kafka, Confluent Kafka, Datadog, Prometheus, Pulsar/Streaming, and Splunk. You can also use Grafana or Grafana Cloud to visualize the exported metrics.

Pricing

With an Astra DB PAYG or Enterprise plan, there is no additional cost for using Astra DB Metrics beyond standard data transfer charges. Exporting third-party metrics is not available on the Astra DB Free Tier.

Metrics monitoring may incur costs at the destination system. Consult the destination system’s documentation for its pricing information.

Roles and permissions

The following Astra DB roles can export third-party metrics:

- Organization Administrator (recommended)
- Database Administrator
- Service Account Administrator
- User Administrator

The required db-manage-thirdpartymetrics permission is automatically assigned to those roles.

If you create a custom role in Astra DB, be sure to assign the db-manage-thirdpartymetrics permission to it.

Database health metrics forwarded by Astra DB

Metrics are not alerts. Astra DB exports metrics to an external destination system so that you can devise alerts based on the metrics' values. Also note that when you use the Astra DB Metrics feature together with the private link feature, the exported metrics traffic does not use the private link connection. Metrics traffic flows over the public interfaces, as it would without a private link.

The forwarded Astra DB health metrics are aggregated values, calculated once every minute. For each metric, a rate of increase is produced over both 1 minute and 5 minutes. The Astra DB Metrics feature forwards the following database health metrics:

- astra_db_rate_limited_requests:rate1m and astra_db_rate_limited_requests:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for the number of operations that failed due to an Astra DB rate limit. You can request an increase to the rate limits for your Astra DB databases. Using these rates, alert if the value is > 0.

- astra_db_read_requests_failures:rate1m and astra_db_read_requests_failures:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for the number of failed reads. Cassandra drivers retry failed operations, but significant failures can be problematic. Using these rates, alert if the value is > 0. Raise a Warn alert on low amounts and a High alert on larger amounts; you might determine these thresholds as a percentage of read throughput.

- astra_db_read_requests_timeouts:rate1m and astra_db_read_requests_timeouts:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for read timeouts. Timeouts happen when operations against the database take longer than the server-side timeout. Using these rates, alert if the value is > 0.

- astra_db_read_requests_unavailables:rate1m and astra_db_read_requests_unavailables:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for reads where the service is not available to complete a specific request. Using these rates, alert if the value is > 0.

- astra_db_write_requests_failures:rate1m and astra_db_write_requests_failures:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for the number of failed writes. Cassandra drivers retry failed operations, but significant failures can be problematic. Using these rates, alert if the value is > 0. Raise a Warn alert on low amounts and a High alert on larger amounts; you might determine these thresholds as a percentage of write throughput.

- astra_db_write_requests_timeouts:rate1m and astra_db_write_requests_timeouts:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for write timeouts, which occur when operations take longer than the server-side timeout. Using these rates, compare with write_requests_failures.

- astra_db_write_requests_unavailables:rate1m and astra_db_write_requests_unavailables:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for write unavailable errors, which occur when the service is not available to serve a particular request. Using these rates, compare with write_requests_failures.

- astra_db_range_requests_failures:rate1m and astra_db_range_requests_failures:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for the number of failed range reads. Cassandra drivers retry failed operations, but significant failures can be problematic. Using these rates, alert if the value is > 0. Raise a Warn alert on low amounts and a High alert on larger amounts; you might determine these thresholds as a percentage of range read throughput.

- astra_db_range_requests_timeouts:rate1m and astra_db_range_requests_timeouts:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for range read timeouts, which are a subset of total failures. Use this metric to understand whether failures are due to timeouts. Using these rates, compare with range_requests_failures.

- astra_db_range_requests_unavailables:rate1m and astra_db_range_requests_unavailables:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for range read unavailable errors, which are a subset of total failures. Use this metric to understand whether failures are due to unavailable errors. Using these rates, compare with range_requests_failures.

- astra_db_write_latency_seconds:rate1m and astra_db_write_latency_seconds:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for write throughput. Alert based on your application’s Service Level Objective (business requirement).

- astra_db_write_latency_seconds_P$QUANTILE:rate1m and astra_db_write_latency_seconds_P$QUANTILE:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for write latency, where $QUANTILE is a histogram quantile of 99, 95, 90, 75, or 50 (for example, astra_db_write_latency_seconds_P99:rate1m). Alert based on your application’s Service Level Objective (business requirement).

- astra_db_write_requests_mutation_size_bytesP$QUANTILE:rate1m and astra_db_write_requests_mutation_size_bytesP$QUANTILE:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for the size of writes over time, where $QUANTILE is a histogram quantile of 99, 95, 90, 75, or 50. For example, astra_db_write_requests_mutation_size_bytesP99:rate1m.

- astra_db_read_latency_seconds:rate1m and astra_db_read_latency_seconds:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for read throughput. Alert based on your application’s Service Level Objective (business requirement).

- astra_db_read_latency_secondsP$QUANTILE:rate1m and astra_db_read_latency_secondsP$QUANTILE:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for read latency percentiles, where $QUANTILE is a histogram quantile of 99, 95, 90, 75, or 50. For example, astra_db_read_latency_secondsP99:rate1m. Alert based on your application’s Service Level Objective (business requirement).

- astra_db_range_latency_seconds:rate1m and astra_db_range_latency_seconds:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for range read throughput. Alert based on your application’s Service Level Objective (business requirement).

- astra_db_range_latency_secondsP$QUANTILE:rate1m and astra_db_range_latency_secondsP$QUANTILE:rate5m - A calculated rate of change (over 1 minute and 5 minutes, respectively) for range read latency, where $QUANTILE is a histogram quantile of 99, 95, 90, 75, or 50. For example, astra_db_range_latency_secondsP99. Alert based on your application’s Service Level Objective (business requirement).
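The naming pattern and the alerting guidance above can be sketched in code. This is a minimal Python sketch under stated assumptions. expand_quantile_names and failure_severity are hypothetical helpers, not part of Astra DB. The 1% High-alert threshold is illustrative, not an Astra DB recommendation. You would pass in values that you have already read from your destination system.

```python
from typing import List

# Hypothetical helpers; the thresholds are illustrative, not Astra DB defaults.
QUANTILES = (99, 95, 90, 75, 50)
WINDOWS = ("rate1m", "rate5m")


def expand_quantile_names(template: str) -> List[str]:
    """Expand a metric name containing $QUANTILE into every quantile/window pair."""
    return [
        f"{template.replace('$QUANTILE', str(q))}:{window}"
        for q in QUANTILES
        for window in WINDOWS
    ]


def failure_severity(failure_rate: float, throughput_rate: float,
                     high_pct: float = 1.0) -> str:
    """Return "ok", "warn", or "high" for a failure rate.

    Any failure rate above 0 is at least a warning. When failures reach
    high_pct percent of throughput (an assumed threshold), escalate to "high".
    """
    if failure_rate <= 0:
        return "ok"
    if throughput_rate <= 0:
        # Failures with no measured throughput: escalate rather than divide by zero.
        return "high"
    percent = 100.0 * failure_rate / throughput_rate
    return "high" if percent >= high_pct else "warn"
```

For example, you might pass astra_db_read_requests_failures:rate1m as the failure rate. As the throughput rate, you might pass astra_db_read_latency_seconds:rate1m, which this topic describes as read throughput.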

Prometheus setup at the destination

For information about setting up Prometheus itself as the destination of the forwarded Astra DB database metrics, see the Prometheus Getting Started documentation.

For Prometheus,

After completing those steps in your Prometheus environment, verify the setup by sending a POST request to the remote write endpoint. Consider using the following example test client, which also verifies that ingress is set up properly:

Prometheus Remote Write Client (promremote), written in Go.

For more information about Prometheus metric types, see this topic on prometheus.io.

Kafka setup at the destination

For information about setting up Kafka as a destination of the forwarded Astra DB database metrics, see:

- Kafka metrics overview and Kafka Monitoring in the open-source Apache Kafka documentation.
- Confluent Cloud Kafka documentation.

Amazon CloudWatch setup at the destination

In AWS, the secret key user must have the PutMetricData action defined as the minimum required permission. For example, in AWS Identity and Access Management (IAM), define a policy such as the following. Attach it to the IAM user whose access key and secret key you provide to Astra DB for exporting metrics to CloudWatch.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "cloudwatch:PutMetricData",
      "Resource": "*"
    }
  ]
}

For more, see:

Configure the POST payload

Use the following POST to export metrics to an external system:

POST /v2/databases/{databaseId}/telemetry/metrics

The configuration payload (JSON) depends on which destination you use. Supported destinations are Prometheus, Apache Kafka, Confluent Kafka, Amazon CloudWatch, Splunk, Pulsar, and Datadog.

To ensure metrics are enabled for your destination app, provide the relevant properties as described below.

Each |

See the following sections for curl examples. If you prefer, use Postman with raw JSON in the body.

- In the --header, use Bearer and specify your application token to authenticate with the DevOps v2 API. If you don’t have a current token, see Manage application tokens.

- Specify your database ID. See the Astra Portal dashboard for its value. You can define a variable such as $DB_ID, set it to your databaseId value, and then use the variable in a curl command. Put the URL in double quotes so that the shell expands the variable. Inside single quotes, the shell sends the literal text $DB_ID.

- In the request payload, specify the destination’s --data properties.
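The steps above can also be expressed in code. The following Python sketch builds, but does not send, the same requests that the curl examples make, using only the standard library. build_metrics_request is a hypothetical helper. Sending a Content-Type of application/json with the POST body is an assumption, not a documented requirement.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

ASTRA_API = "https://api.astra.datastax.com"


def build_metrics_request(db_id: str, token: str,
                          payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a request for the telemetry/metrics endpoint.

    With a payload, the request is a POST that sets the export configuration;
    without one, it is a GET that retrieves the current configuration.
    """
    url = f"{ASTRA_API}/v2/databases/{urllib.parse.quote(db_id, safe='')}/telemetry/metrics"
    headers = {"Accept": "application/json", "Authorization": f"Bearer {token}"}
    if payload is None:
        return urllib.request.Request(url, headers=headers, method="GET")
    # The destination configuration travels as a JSON body.
    headers["Content-Type"] = "application/json"
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=data, headers=headers, method="POST")
```

To send a request, you could read the database ID from DB_ID, as in this topic, and the token from a hypothetical ASTRA_TOKEN environment variable. Then pass the result to urllib.request.urlopen, which raises urllib.error.HTTPError for 4xx and 5xx responses.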

Astra DB Metrics configuration for Prometheus

With a required top-level key of prometheus_remote, the POST payload has the following properties:

- prometheus_remote
  - endpoint
  - auth_strategy
  - token
  - user
  - password

- For auth_strategy, specify basic or bearer, depending on your Prometheus remote_write auth type.
  - If you specified "auth_strategy": "bearer", provide your Prometheus token. Do not include user or password in the POST request payload.
  - If you specified "auth_strategy": "basic", provide your Prometheus user and password. Do not include token.
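You can enforce the auth_strategy rules above before you send the request. This minimal sketch uses a hypothetical helper, prometheus_remote_payload. It builds the prometheus_remote object and rejects combinations that the rules forbid. It checks only the rules stated here, not whether the endpoint or credentials actually work.

```python
from typing import Optional


def prometheus_remote_payload(endpoint: str, auth_strategy: str,
                              token: Optional[str] = None,
                              user: Optional[str] = None,
                              password: Optional[str] = None) -> dict:
    """Build a prometheus_remote payload that follows the auth_strategy rules."""
    if auth_strategy == "bearer":
        # Bearer auth: a token is required; user and password must be omitted.
        if not token or user or password:
            raise ValueError("bearer requires token and must not include user or password")
        body = {"endpoint": endpoint, "auth_strategy": "bearer", "token": token}
    elif auth_strategy == "basic":
        # Basic auth: user and password are required; token must be omitted.
        if not (user and password) or token:
            raise ValueError("basic requires user and password and must not include token")
        body = {"endpoint": endpoint, "auth_strategy": "basic",
                "user": user, "password": password}
    else:
        raise ValueError(f"unsupported auth_strategy: {auth_strategy!r}")
    return {"prometheus_remote": body}
```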

Example payloads:

{
  "prometheus_remote": {
    "endpoint": "https://prometheus.example.com/api/prom/push",
    "auth_strategy": "bearer",
    "token": "lSAYp9oLtdAa9ajasoNNS999"
  }
}

Or:

{
  "prometheus_remote": {
    "endpoint": "https://prometheus.example.com/api/prom/push",
    "auth_strategy": "basic",
    "password": "myPromPassword",
    "user": "myPromUsername"
  }
}

For Prometheus,


POST metrics configuration examples (Prometheus)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for Prometheus where auth_strategy is basic:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --data '{
    "prometheus_remote": {
      "endpoint": "https://prometheus.example.com/api/prom/push",
      "auth_strategy": "basic",
      "user": "myPromUsername",
      "password": "myPromPassword"
    }
  }'

Example POST payload for Prometheus where auth_strategy is bearer:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --data '{
    "prometheus_remote": {
      "endpoint": "https://prometheus.example.com/api/prom/push",
      "auth_strategy": "bearer",
      "token": "lSAYp999999999999Aa9ajasoNNS999"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.
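If you script these calls, you can turn the status codes above into a readable message. Error responses carry an errors array whose entries have description, internalCode, and internalTxId fields, and the message can include those too. summarize_response is a hypothetical helper. It assumes that error bodies follow the documented shape and treats 200 (GET) and 202 (POST) as success.

```python
import json

# Meanings taken from the status lists in this topic.
STATUS_MEANINGS = {
    400: "Bad request.",
    401: "Unauthorized.",
    403: "The user is forbidden to perform the operation.",
    404: "The specified resource was not found.",
    409: "The request could not be processed because of conflict.",
}


def summarize_response(status: int, body) -> str:
    """Summarize a telemetry/metrics response status and any error details."""
    if status in (200, 202):
        return "ok"
    if status in STATUS_MEANINGS:
        meaning = STATUS_MEANINGS[status]
    elif 500 <= status <= 599:
        meaning = "A server error occurred."
    else:
        meaning = "Unexpected status."
    try:
        errors = json.loads(body).get("errors") or []
    except (TypeError, ValueError, AttributeError):
        # Body missing, not JSON, or not a JSON object.
        errors = []
    details = "; ".join(
        f"{e.get('description')} (code {e.get('internalCode')}, tx {e.get('internalTxId')})"
        for e in errors
        if isinstance(e, dict)
    )
    return f"{status} {meaning} {details}".strip()
```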

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Get metrics configuration examples (Prometheus)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include

200 OK

Example response for Prometheus:

{
  "prometheus_remote": {
    "endpoint": "https://prometheus.example.com/api/prom/push",
    "auth_strategy": "basic",
    "user": "myPromUsername",
    "password": "myPromPassword"
  }
}

Or one of the following:

400 Bad request.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

500 A server error occurred.

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Astra DB Metrics configuration for Kafka

With a required top-level key of kafka, the POST payload’s required properties are:

- bootstrap_servers
- topic
- sasl_mechanism
- sasl_username
- sasl_password
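Here is a minimal sketch for assembling the kafka object from the required properties above. kafka_payload is a hypothetical helper. Accepting a comma-separated string for bootstrap_servers is a convenience assumed here; the payload itself always uses a JSON array, as in the examples. The host:port check is a basic sanity check, not a connectivity test.

```python
from typing import Optional, Sequence, Union

REQUIRED_KAFKA_KEYS = ("bootstrap_servers", "topic", "sasl_mechanism",
                       "sasl_username", "sasl_password")


def kafka_payload(bootstrap_servers: Union[str, Sequence[str]], topic: str,
                  sasl_mechanism: str, sasl_username: str, sasl_password: str,
                  security_protocol: Optional[str] = None) -> dict:
    """Build a kafka payload; bootstrap_servers may be a list or a comma-separated string."""
    if isinstance(bootstrap_servers, str):
        servers = [s.strip() for s in bootstrap_servers.split(",") if s.strip()]
    else:
        servers = list(bootstrap_servers)
    for server in servers:
        # Each bootstrap server must look like host:port.
        host, sep, port = server.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {server!r}")
    body = {
        "bootstrap_servers": servers,
        "topic": topic,
        "sasl_mechanism": sasl_mechanism,
        "sasl_username": sasl_username,
        "sasl_password": sasl_password,
    }
    missing = [key for key in REQUIRED_KAFKA_KEYS if not body[key]]
    if missing:
        raise ValueError(f"missing required properties: {', '.join(missing)}")
    if security_protocol is not None:
        body["security_protocol"] = security_protocol
    return {"kafka": body}
```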

Example payload for Kafka:

{
  "kafka": {
    "bootstrap_servers": [
      "pkc-9999e.us-east-1.aws.confluent.cloud:9092"
    ],
    "topic": "astra_metrics_events",
    "sasl_mechanism": "PLAIN",
    "sasl_username": "9AAAAALPRC9AAAAA",
    "sasl_password": "viAAr/geQxxacrAAmydHb7wz6DRu6mL9W9999juQcS1s++pECM99mnW+3Gs06xDd",
    "security_protocol": "SASL_PLAINTEXT"
  }
}

The

Be sure to specify the appropriate, related |

POST metrics configuration example (Kafka)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for Kafka:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --data '{
    "kafka": {
      "bootstrap_servers": [
        "kafka-0.yourdomain.com:9092"
      ],
      "topic": "astra_metrics_events",
      "sasl_mechanism": "PLAIN",
      "sasl_username": "kafkauser",
      "sasl_password": "kafkapassword",
      "security_protocol": "SASL_PLAINTEXT"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Get metrics configuration examples (Kafka)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include

200 OK

Example response for Kafka:

{
  "kafka": {
    "bootstrap_servers": [
      "kafka-0.yourdomain.com:9092"
    ],
    "topic": "astra_metrics_events",
    "sasl_mechanism": "PLAIN",
    "sasl_username": "kafkauser",
    "sasl_password": "kafkapassword",
    "security_protocol": "SASL_PLAINTEXT"
  }
}

Or one of the following:

400 Bad request.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

500 A server error occurred.

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Astra DB Metrics configuration for Amazon CloudWatch

With a required top-level key of cloudwatch, the POST payload’s required properties are:

- access_key
- secret_key
- region

Example payload for CloudWatch:

{
  "cloudwatch": {
    "access_key": "AKIAIOSFODNN7EXAMPLE",
    "secret_key": "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
    "region": "us-east-1"
  }
}

You provide your AWS access keys so that AWS can verify your identity in programmatic calls. Your access keys consist of an access key ID (for example, AKIAIOSFODNN7EXAMPLE) and a secret access key (for example, wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY).

In the region property, you can specify the same AWS region used by the Astra DB serverless database from which you’ll export metrics. However, you have the option of specifying a different AWS region; for example, you might use this option if your app is in another region and you want to see the metrics together.

Reminder: In AWS, the secret key user must have the PutMetricData action defined as the minimum required permission.
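To avoid hard-coding access keys in scripts, you could read them from the environment variables that AWS tools conventionally use: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_REGION or AWS_DEFAULT_REGION. cloudwatch_payload_from_env is a hypothetical helper and only a sketch. It does not verify the keys with AWS or check the PutMetricData permission.

```python
import os
from typing import Mapping, Optional


def cloudwatch_payload_from_env(env: Optional[Mapping[str, str]] = None) -> dict:
    """Build a cloudwatch payload from the conventional AWS environment variables."""
    env = os.environ if env is None else env
    access_key = env.get("AWS_ACCESS_KEY_ID")
    secret_key = env.get("AWS_SECRET_ACCESS_KEY")
    # Both variable names are common conventions for the default region.
    region = env.get("AWS_REGION") or env.get("AWS_DEFAULT_REGION")
    missing = [
        name
        for name, value in (
            ("AWS_ACCESS_KEY_ID", access_key),
            ("AWS_SECRET_ACCESS_KEY", secret_key),
            ("AWS_REGION or AWS_DEFAULT_REGION", region),
        )
        if not value
    ]
    if missing:
        raise ValueError(f"missing environment variables: {', '.join(missing)}")
    return {"cloudwatch": {"access_key": access_key,
                           "secret_key": secret_key,
                           "region": region}}
```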

POST metrics configuration example (Amazon CloudWatch)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for CloudWatch:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose \
  --data '{
    "cloudwatch": {
      "access_key": "AKIAIOSFODNN7EXAMPLE",
      "secret_key": "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
      "region": "us-east-1"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Get metrics configuration examples (CloudWatch)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose

200 OK

Example response for Amazon CloudWatch:

{
  "cloudwatch": {
    "access_key": "AKIAIOSFODNN7EXAMPLE",
    "secret_key": "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
    "region": "us-east-1"
  }
}

Or one of the following:

400 Bad request.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

500 A server error occurred.

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Astra DB Metrics configuration for Splunk

With a required top-level key of splunk, the POST payload’s required properties are:

- endpoint
- index
- token

Optional properties:

- source
- sourcetype

Example payload for Splunk:

{
  "splunk": {
    "endpoint": "https://http-inputs-YOURCOMPANY.splunkcloud.com",
    "index": "astra_third_party_metrics_test",
    "token": "splunk-token",
    "source": "splunk-source",
    "sourcetype": "splunk-sourcetype"
  }
}

- For the required endpoint, provide the full HTTP address and path for the Splunk HTTP Event Collector (HEC) endpoint. If you are unsure of this address, contact your Splunk administrator.
- For the required index, provide the Splunk index to which you want to write metrics. The Splunk token must have permission to write to that index.
- For the required token, provide the Splunk HEC token for Splunk authentication.
- For the optional source, provide the source of events sent to this sink. If unset, the API sets it to the default value astradb.
- For the optional sourcetype, provide the source type of events sent to this sink. If unset, the API sets it to the default value astradb-metrics.
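You can preview the optional source and sourcetype defaults described above on the client side. splunk_payload and effective_splunk_config are hypothetical helpers. The default values astradb and astradb-metrics come from this topic. The merge only approximates what the API does when those properties are unset.

```python
from typing import Optional

# Defaults that, per this topic, the API applies when source/sourcetype are unset.
SPLUNK_DEFAULTS = {"source": "astradb", "sourcetype": "astradb-metrics"}


def splunk_payload(endpoint: str, index: str, token: str,
                   source: Optional[str] = None,
                   sourcetype: Optional[str] = None) -> dict:
    """Build a splunk payload, including optional properties only when set."""
    if not endpoint.startswith(("https://", "http://")):
        raise ValueError("endpoint must be the full HTTP(S) address of the HEC endpoint")
    if not index or not token:
        raise ValueError("index and token are required")
    body = {"endpoint": endpoint, "index": index, "token": token}
    if source:
        body["source"] = source
    if sourcetype:
        body["sourcetype"] = sourcetype
    return {"splunk": body}


def effective_splunk_config(payload: dict) -> dict:
    """Preview the settings with the documented defaults filled in."""
    return {**SPLUNK_DEFAULTS, **payload["splunk"]}
```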

POST metrics configuration example (Splunk)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for Splunk:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose \
  --data '{
    "splunk": {
      "endpoint": "https://http-inputs-YOURCOMPANY.splunkcloud.com",
      "index": "astra_third_party_metrics_test",
      "token": "splunk-token",
      "source": "splunk-source",
      "sourcetype": "splunk-sourcetype"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "999B-A099-9999-0ABF"
    }
  ]
}

Get metrics configuration examples (Splunk)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose

200 OK

Example response for Splunk:

{
  "splunk": {
    "endpoint": "https://http-inputs-YOURCOMPANY.splunkcloud.com",
    "index": "astra_third_party_metrics_test",
    "token": "splunk-token",
    "source": "splunk-source",
    "sourcetype": "splunk-sourcetype"
  }
}

Or one of the following:

400 Bad request.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

500 A server error occurred.

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "999B-A999-9999-0ABF"
    }
  ]
}

Astra DB Metrics configuration for Pulsar

With a required top-level key of pulsar, the POST payload’s required properties are:

- endpoint - Provide the URL of your Pulsar broker.
- topic - Provide the Pulsar topic to which you’ll publish telemetry.
- auth_name - The authentication name, such as my-auth.
- auth_strategy - Provide the authentication strategy used by your Pulsar broker. The value should be token or oauth2.
  - If auth_strategy is token, provide the token for Pulsar authentication.
  - If auth_strategy is oauth2, provide the required oauth2_credentials_url and oauth2_issuer_url properties. You can optionally also provide oauth_audience and oauth2_scope.
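You can check the Pulsar requirements above with a small validator. pulsar_problems is a hypothetical helper that follows the required-property list literally. It therefore flags a missing auth_name even for oauth2, although the oauth2 example in this topic omits auth_name, so treat that check as an assumption to confirm against the API. The sketch does not check the optional oauth2 properties.

```python
from typing import List


def pulsar_problems(body: dict) -> List[str]:
    """Return a list of problems with a pulsar payload body (empty if none)."""
    problems = []
    # Properties listed as required for every auth strategy.
    for key in ("endpoint", "topic", "auth_name", "auth_strategy"):
        if not body.get(key):
            problems.append(f"missing {key}")
    strategy = body.get("auth_strategy")
    if strategy == "token":
        if not body.get("token"):
            problems.append("missing token")
    elif strategy == "oauth2":
        for key in ("oauth2_credentials_url", "oauth2_issuer_url"):
            if not body.get(key):
                problems.append(f"missing {key}")
    elif strategy:
        problems.append(f"unsupported auth_strategy {strategy!r}")
    return problems
```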

Example POST payload for Pulsar where auth_strategy is token:

{
  "pulsar": {
    "endpoint": "pulsar+ssl://pulsar.example.com",
    "topic": "my-topic-123",
    "auth_strategy": "token",
    "token": "AstraTelemetry123",
    "auth_name": "my-auth"
  }
}

Example POST payload for Pulsar where auth_strategy is oauth2:

{
  "pulsar": {
    "endpoint": "pulsar+ssl://pulsar.example.com",
    "topic": "my-topic-123",
    "auth_strategy": "oauth2",
    "oauth2_credentials_url": "https://<credentials URL for OAuth2>.com",
    "oauth2_issuer_url": "https://astra-oauth2.example.com"
  }
}

POST metrics configuration example (Pulsar)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for Pulsar where auth_strategy is token:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose \
  --data '{
    "pulsar": {
      "endpoint": "pulsar+ssl://pulsar.example.com",
      "topic": "my-topic-123",
      "auth_strategy": "token",
      "token": "AstraTelemetry123",
      "auth_name": "my-auth"
    }
  }'

Example POST payload for Pulsar where auth_strategy is oauth2:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose \
  --data '{
    "pulsar": {
      "endpoint": "pulsar+ssl://pulsar.example.com",
      "topic": "my-topic-123",
      "auth_strategy": "oauth2",
      "oauth2_credentials_url": "https://<credentials URL for OAuth2>.com",
      "oauth2_issuer_url": "https://astra-oauth2.example.com"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Get metrics configuration examples (Pulsar)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose

200 OK

Example response for Pulsar where `auth_strategy` is `token`:

{
  "pulsar": {
    "endpoint": "pulsar+ssl://pulsar.example.com",
    "topic": "my-topic-123",
    "auth_strategy": "token",
    "token": "AstraTelemetry123",
    "auth_name": "my-auth"
  }
}

Example response for Pulsar where `auth_strategy` is `oauth2`:

{
  "pulsar": {
    "endpoint": "pulsar+ssl://pulsar.example.com",
    "topic": "my-topic-123",
    "auth_strategy": "oauth2",
    "oauth2_credentials_url": "https://<credentials URL for OAuth2>.com",
    "oauth2_issuer_url": "https://astra-oauth2.example.com"
  }
}

Or one of the following:

400 Bad request.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

500 A server error occurred.

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Astra DB Metrics configuration for Datadog

With a top-level key of Datadog, the POST payload’s required properties are:

- api_key
- site

Payload format for Datadog:

{
  "Datadog": {
    "api_key": "<your-api-key-to-authenticate-to-datadog-api>",
    "site": "<datadog-site-you-are-using>"
  }
}

Notes:

- api_key: The required API key that lets your Astra DB metrics export operation authenticate with the Datadog API. For details, see the Authentication topic in the Datadog documentation. Before submitting a DevOps call with the api_key value, validate that the key is correct by using the Validate API key curl command described in the Datadog documentation.
- site: The Datadog site to which the exported Astra DB health metrics will be sent. For details, including the correct format to specify in the DevOps call, see the Getting Started with Datadog Sites topic in the Datadog documentation. Datadog sites are named in different ways, so see the Datadog documentation for important details. Summary:
  - If you’ll send Astra DB health metrics to a Datadog site prefixed with "app", remove both the "https://" protocol and the "app" prefix from the site parameter that you specify in the DevOps call.
  - If you’ll send Astra DB health metrics to a Datadog site prefixed with a subdomain such as "us5", remove only the "https://" protocol from the site parameter that you specify in the DevOps call.
  - Other Datadog site parameters are possible. See the table in the Datadog documentation for guidance on the appropriate site parameter format.
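You can apply the site-format summary above mechanically. datadog_site_param is a hypothetical helper. It strips the https:// protocol and, when present, the app. prefix. For sites outside these two patterns, check the table in the Datadog documentation rather than relying on this sketch.

```python
def datadog_site_param(site_url: str) -> str:
    """Convert a Datadog site URL into the site value for the DevOps call.

    Always drops the "https://" protocol; also drops an "app." prefix.
    """
    site = site_url.strip().rstrip("/")
    if site.startswith("https://"):
        site = site[len("https://"):]
    if site.startswith("app."):
        site = site[len("app."):]
    return site
```

For example, https://app.datadoghq.com becomes datadoghq.com, and https://us5.datadoghq.com becomes us5.datadoghq.com.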

POST metrics configuration example (Datadog)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Example POST payload for Datadog:

curl --request POST \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999ef64ecec800e97d72669e4cEXAMPLE9' \
  --include \
  --verbose \
  --data '{
    "Datadog": {
      "api_key": "<your-api-key-to-authenticate-to-datadog-api>",
      "site": "<datadog-site-you-are-using>"
    }
  }'

202 Accepted

Or one of the following:

400 Bad request.

401 Unauthorized.

403 The user is forbidden to perform the operation.

404 The specified resource was not found.

409 The request could not be processed because of conflict.

5XX A server error occurred.

Example:

{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}

Get metrics configuration examples (Datadog)

In the curl examples, notice we added the --include option, which adds the HTTP response headers (including the status code) to the output.

Retrieve third-party metrics configuration for an Astra DB database:

```shell
curl --request GET \
  --url "https://api.astra.datastax.com/v2/databases/$DB_ID/telemetry/metrics" \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer AstraCS:FAKETOKENVALUE:1b152ed223d3a6e4e61a999999999EXAMPLE9' \
  --include \
  --verbose
```

A successful request returns 200 OK.

Example response for Datadog:

```json
{
  "datadog": {
    "api_key": "<your-api-key-to-authenticate-to-datadog-api>",
    "site": "<datadog-site-you-are-using>"
  }
}
```

Otherwise, the request returns one of the following:

- 400 Bad request.
- 403 The user is forbidden to perform the operation.
- 404 The specified resource was not found.
- 500 A server error occurred.
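To check the configuration from a script, you can have curl append the status code to the body with --write-out and split the two afterward. This is a sketch that assumes hypothetical DB_ID and ASTRA_TOKEN environment variables; the helper names are also hypothetical. The response includes your Datadog API key, so avoid printing the body to shared logs.

```shell
#!/usr/bin/env bash
# Sketch, assuming hypothetical DB_ID and ASTRA_TOKEN environment variables.
# Prints the response body followed by a final line holding the HTTP status.
get_metrics_config() {
  curl --silent --write-out '\n%{http_code}' \
    --request GET \
    --url "https://api.astra.datastax.com/v2/databases/${DB_ID}/telemetry/metrics" \
    --header 'Accept: application/json' \
    --header "Authorization: Bearer ${ASTRA_TOKEN}"
}

# Split the combined output: the last line is the status, the rest is the body.
response_status() { printf '%s\n' "$1" | tail -n 1; }
response_body()   { printf '%s\n' "$1" | sed '$d'; }

# Usage:
# resp=$(get_metrics_config)
# if [ "$(response_status "$resp")" = "200" ]; then
#   response_body "$resp"
# else
#   echo "GET failed with status $(response_status "$resp")" >&2
# fi
```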

Example error response:

```json
{
  "errors": [
    {
      "description": "The name of the environment must be provided",
      "internalCode": "a1012",
      "internalTxId": "103B-A018-3898-0ABF"
    }
  ]
}
```

Visualize exported Astra DB metrics with Grafana Cloud

This section explains how to configure Grafana Cloud to consume Astra DB (serverless) health metrics.

Using Grafana Cloud is optional. You can choose your favorite tool to visualize the Astra DB metrics that you exported to Kafka, Prometheus, Amazon CloudWatch, Splunk, Pulsar, or Datadog.

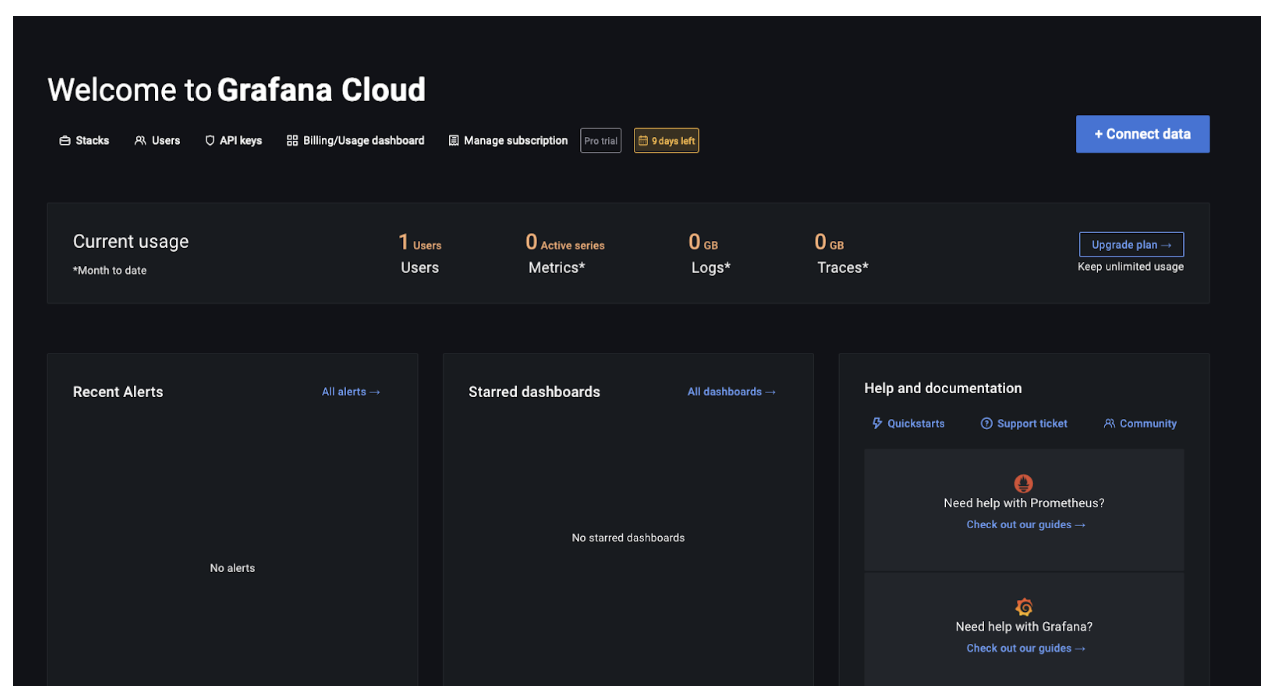

The examples in this section use Prometheus as the destination system. You’ll need a Grafana Cloud account; Grafana offers a Free plan with 14-day retention. See Grafana pricing.

Initial steps in Grafana Cloud

Complete the following initial steps before you submit the POST /v2/databases/{databaseId}/telemetry/metrics payload described previously in this topic.

-

On login to Grafana Cloud, select + Connect data from the home page.

-

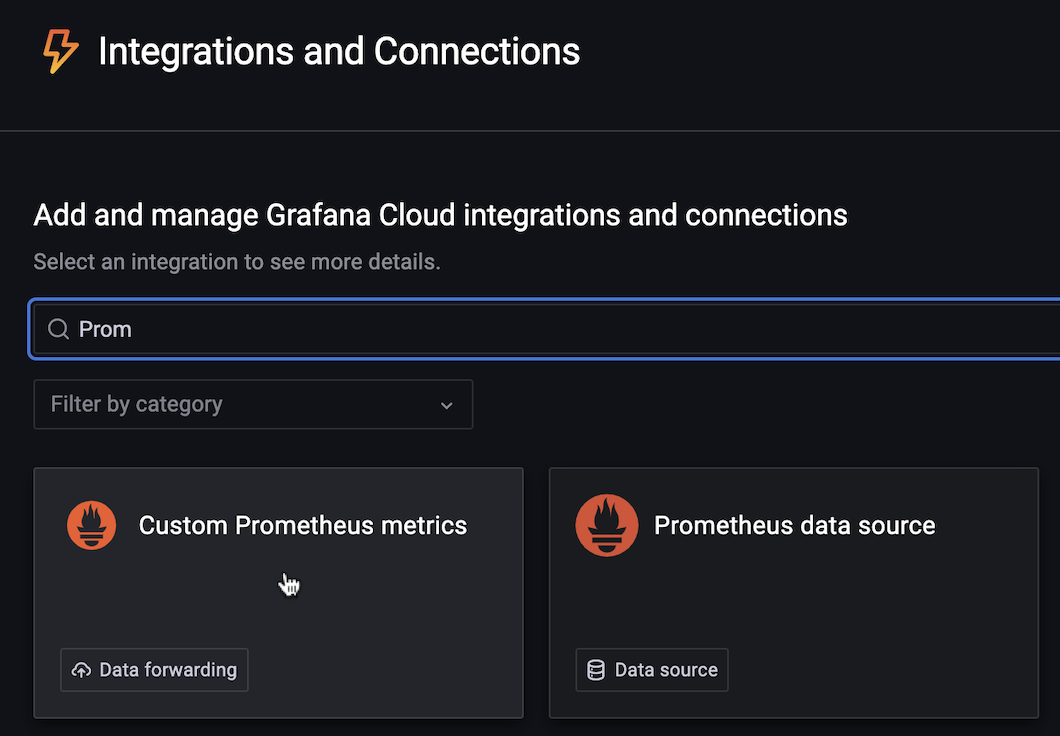

Select the Custom Prometheus metrics section that includes the Prometheus icon.

-

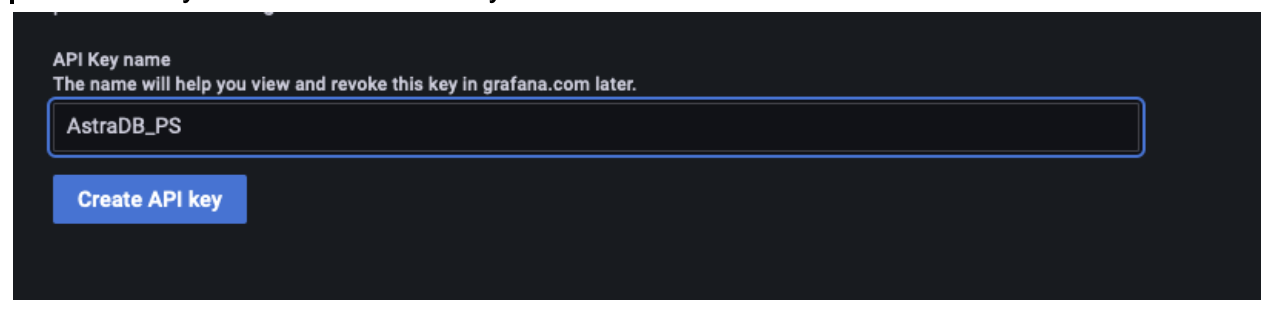

You can accept the default selections, or make edits as needed. Provide a name to the API Key (such as AstraDB_PS) and click Create API Key.

-

The config file is generated. Here’s an example - your values will be different:

```shell
cat << EOF > ./agent-config.yaml
global:
  scrape_interval: 60s
scrape_configs:
  - job_name: node
    static_configs:
      - targets: ['localhost:9100']
remote_write:
  - url: https://prometheus-prod-10-prod-us-central-0.grafana.net/api/prom/push
    basic_auth:
      username: 412XXX
      password: eyJrIjoiMmE1ZTY4YWRhY2ZmNmZlMjllZmY3ZjczYWQ0NzRiZjNlNTE1NTVkMCIsIm4iOiJBc3RyYURCX1BTIiwiaWQiOjYzOTQXXX=
EOF
```

DevOps config via Postman & Grafana Cloud follow-up

To configure and publish metrics from Astra DB using the DevOps API, follow these steps. The examples use Postman, with your application token already configured as the bearer token.

To publish metrics, create a POST request in Postman:

https://api.astra.datastax.com/v2/databases/{databaseId}/telemetry/metrics

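In the Body, set the parameters to the values from the config file that Grafana Cloud generated: endpoint is the remote_write url, and user and password are the basic_auth username and password. Example: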

```json
{
  "prometheus_remote": {
    "endpoint": "https://prometheus-prod-10-prod-us-central-0.grafana.net/api/prom/push",
    "auth_strategy": "basic",
    "user": "412XXX",
    "password": "eyJrIjoiMmE1ZTY4YWRhY2ZmNmZlMjllZmY3ZjczYWQ0NzRiZjNlNTE1NTVkMCIsIm4iOiJBc3RyYURCX1BTIiwiaWQiOjYzOTQXXX="
  }
}
```

On success, the POST request returns 202.
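If you prefer curl to Postman, the same body can be assembled from the generated agent-config.yaml. This sketch assumes the file layout shown earlier (one url under remote_write, and one username and one password under basic_auth) and that none of the values contain quotes or backslashes. The function name is hypothetical, and reading YAML with awk is a shortcut, not a real YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the remote_write values from a Grafana agent
# config file and print the prometheus_remote payload for the DevOps API.
# Assumes "- url:", "username:", and "password:" each appear once, as in
# the example config, and that no JSON escaping is needed.
prometheus_remote_payload() {
  local file="$1" endpoint user password
  endpoint=$(awk '$1 == "-" && $2 == "url:" {print $3; exit}' "$file")
  user=$(awk '$1 == "username:" {print $2; exit}' "$file")
  password=$(awk '$1 == "password:" {print $2; exit}' "$file")
  printf '{"prometheus_remote":{"endpoint":"%s","auth_strategy":"basic","user":"%s","password":"%s"}}' \
    "$endpoint" "$user" "$password"
}

# Usage, assuming hypothetical DB_ID and ASTRA_TOKEN environment variables:
# curl --request POST \
#   --url "https://api.astra.datastax.com/v2/databases/${DB_ID}/telemetry/metrics" \
#   --header 'Content-Type: application/json' \
#   --header "Authorization: Bearer ${ASTRA_TOKEN}" \
#   --data "$(prometheus_remote_payload ./agent-config.yaml)"
```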

Now, switch back to Grafana Cloud:

-

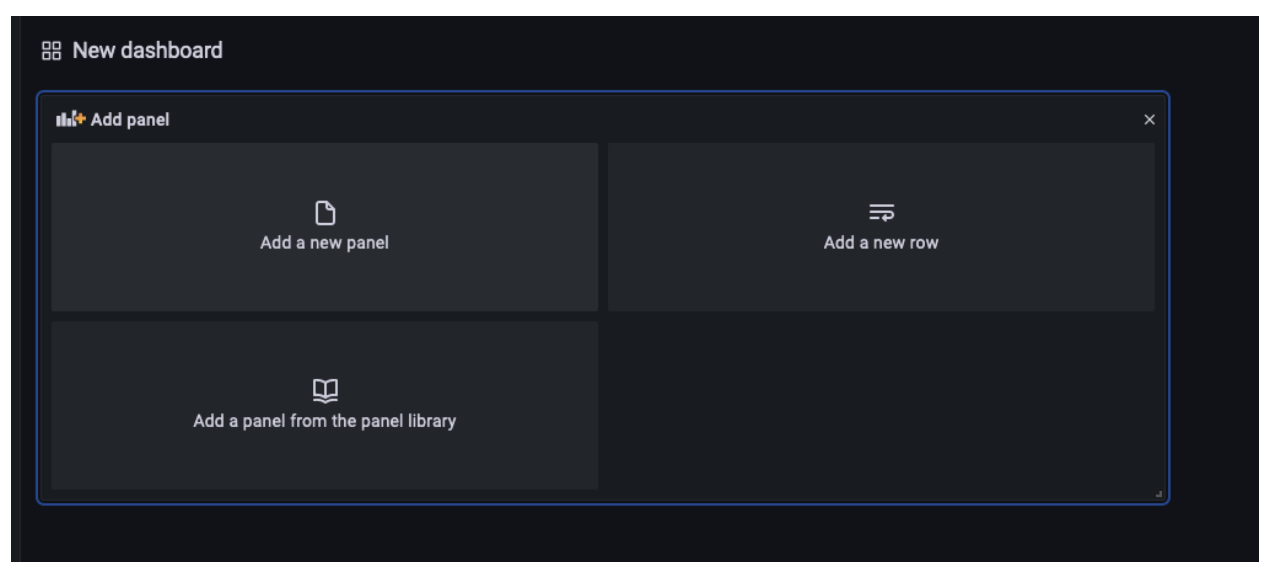

Select the option to Create a New Dashboard.

-

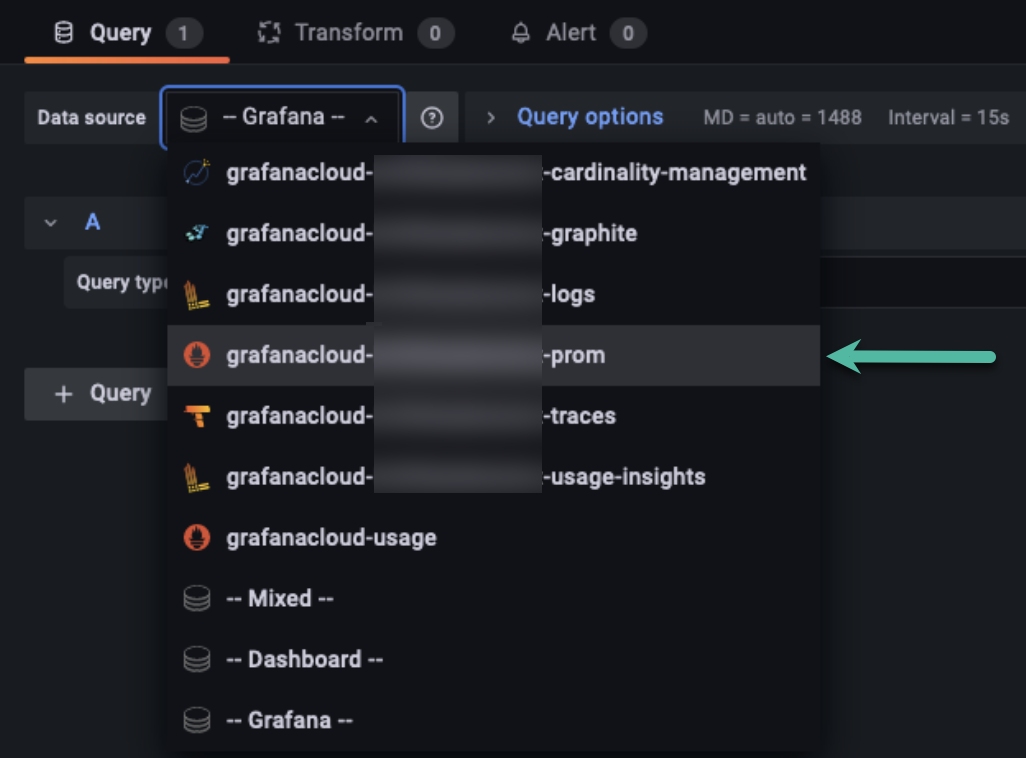

Select Add a new panel and select the Data Source as

grafanacloud-<YourUserId>-prom. Example:

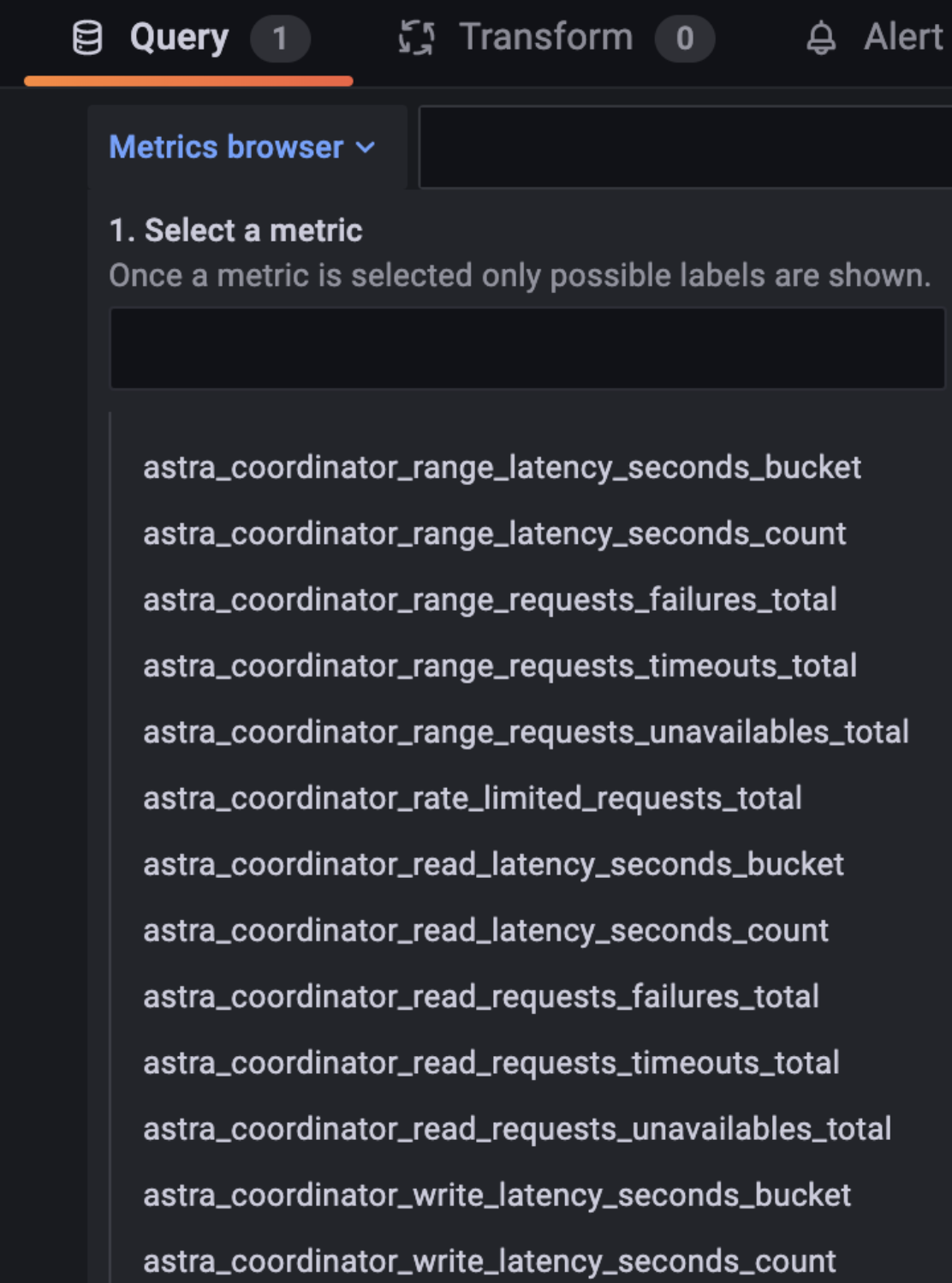

-

If configured correctly, you should see the Astra DB Metrics under the Metrics Browser in Grafana Cloud. Example:

-

Now you can select the metrics that you want to visualize in Grafana Cloud. The Dashboard panel displays the charts.

Alternative approach: import from Astra DB Health to Grafana Cloud

This alternative approach imports the Astra DB Health dashboard into your Grafana Cloud instance. You still need to complete the preceding steps in this section first, and then continue with the steps below.

-

Login to Astra Portal.

-

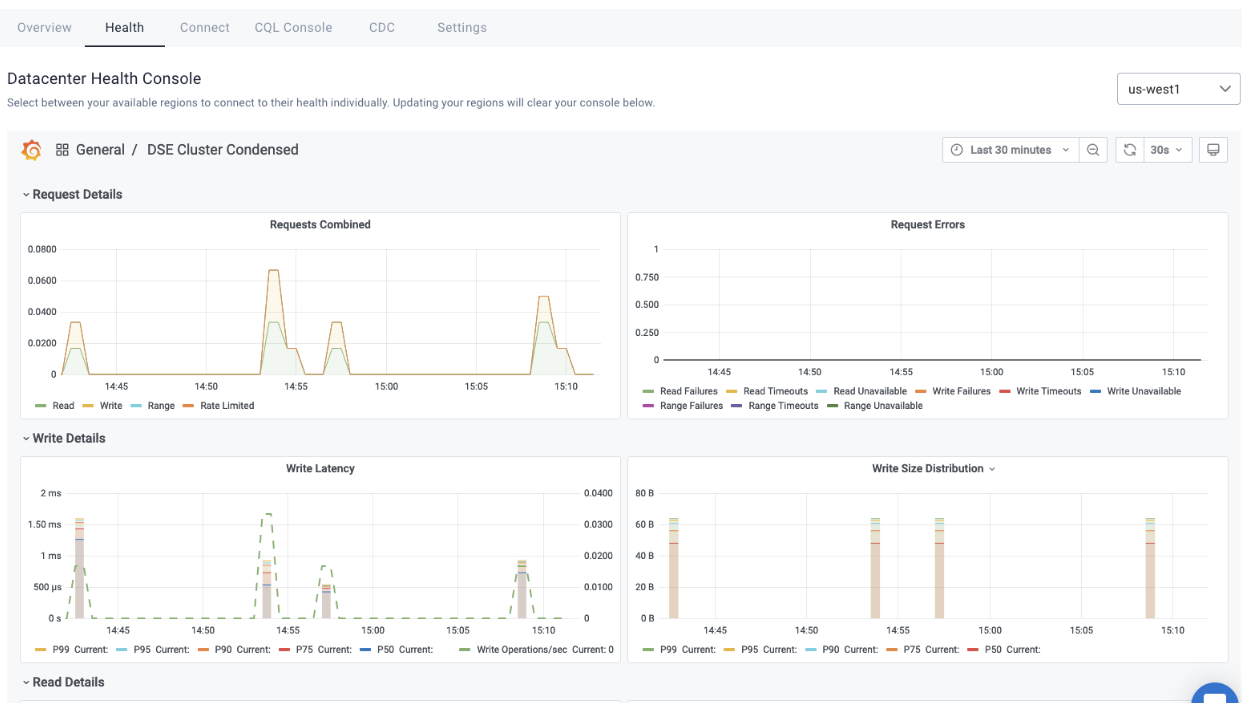

Select the database you want to ultimately monitor in Grafana Cloud by first navigating to your database’s Health tab.

-

Click on DSE Cluster Condensed. Example:

-

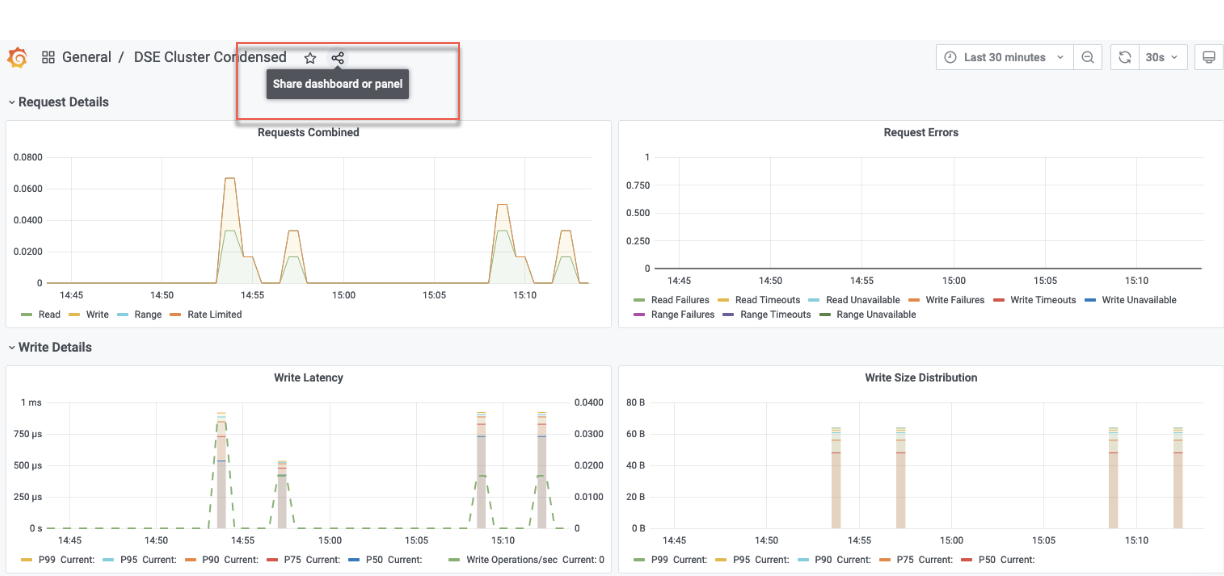

Click the Share icon:

-

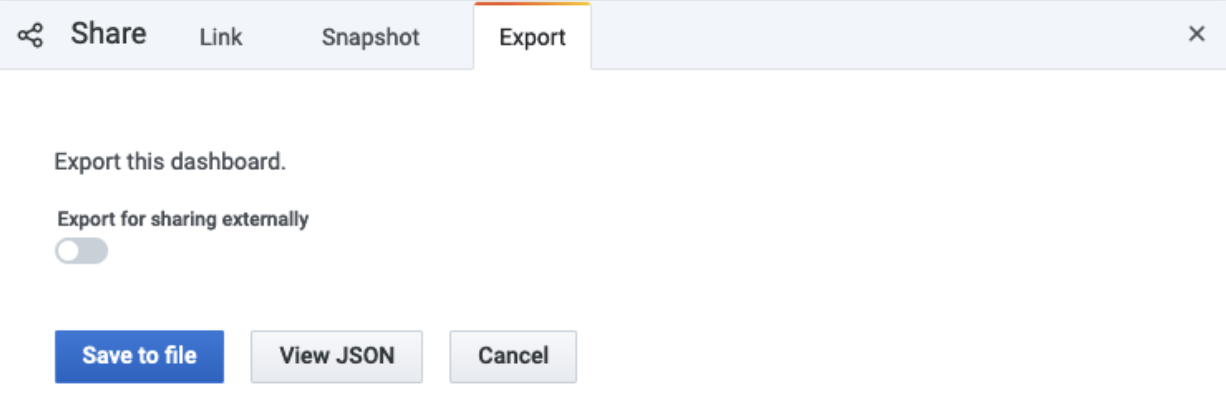

Then select the Export tab:

-

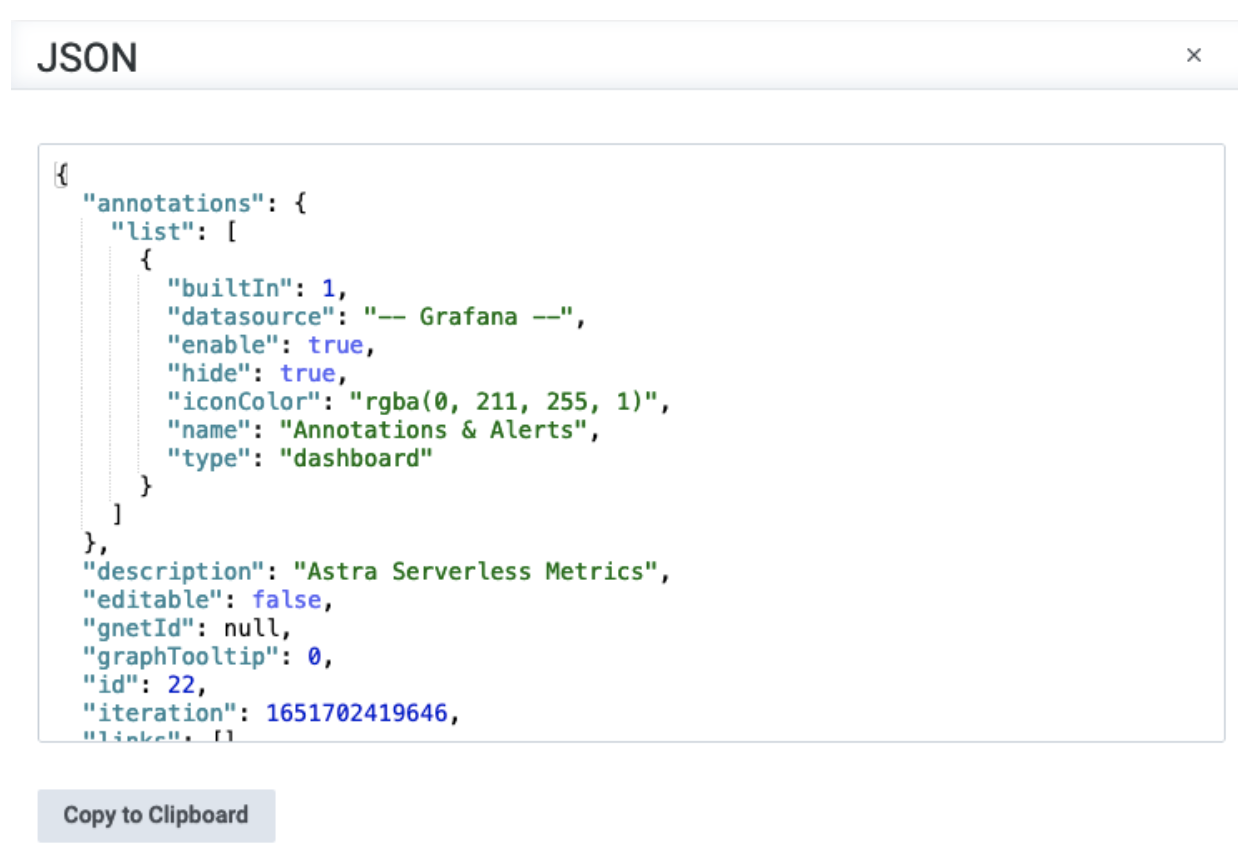

Click View JSON and then Copy to Clipboard:

-

Make the following edits to the copied JSON.

Replace every coordinator_…{tenant= … } reference with astra_coordinator_…{}: add the astra_ prefix to the metric name and remove the tenant reference. For example, in the following expression:

```json
"expr": "histogram_quantile(.99, sum(rate(coordinator_write_requests_mutation_size_bytes_bucket{tenant='${__user.login}'}[$__rate_interval])) by (le))",
```

You would replace that expression with:

```json
"expr": "histogram_quantile(.99, sum(rate(astra_coordinator_write_requests_mutation_size_bytes_bucket{}[$__rate_interval])) by (le))",
```

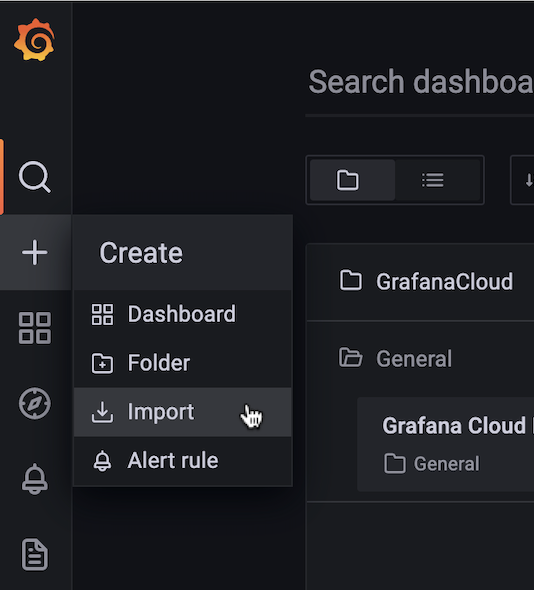

Now switch over to your Grafana Cloud instance. Click the Create option, and then click Import from the menu.

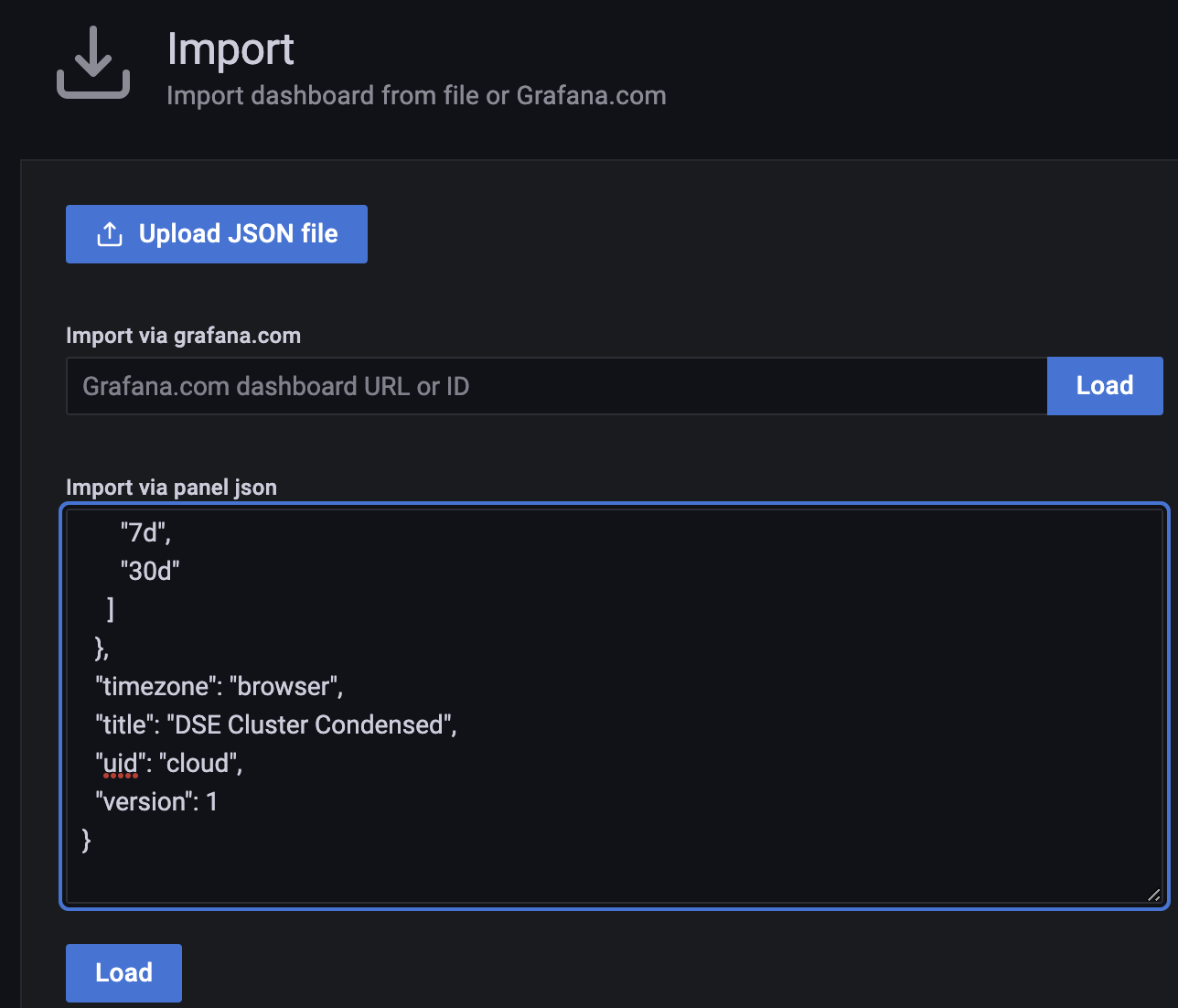

-

Upload or paste in your edited JSON.

-

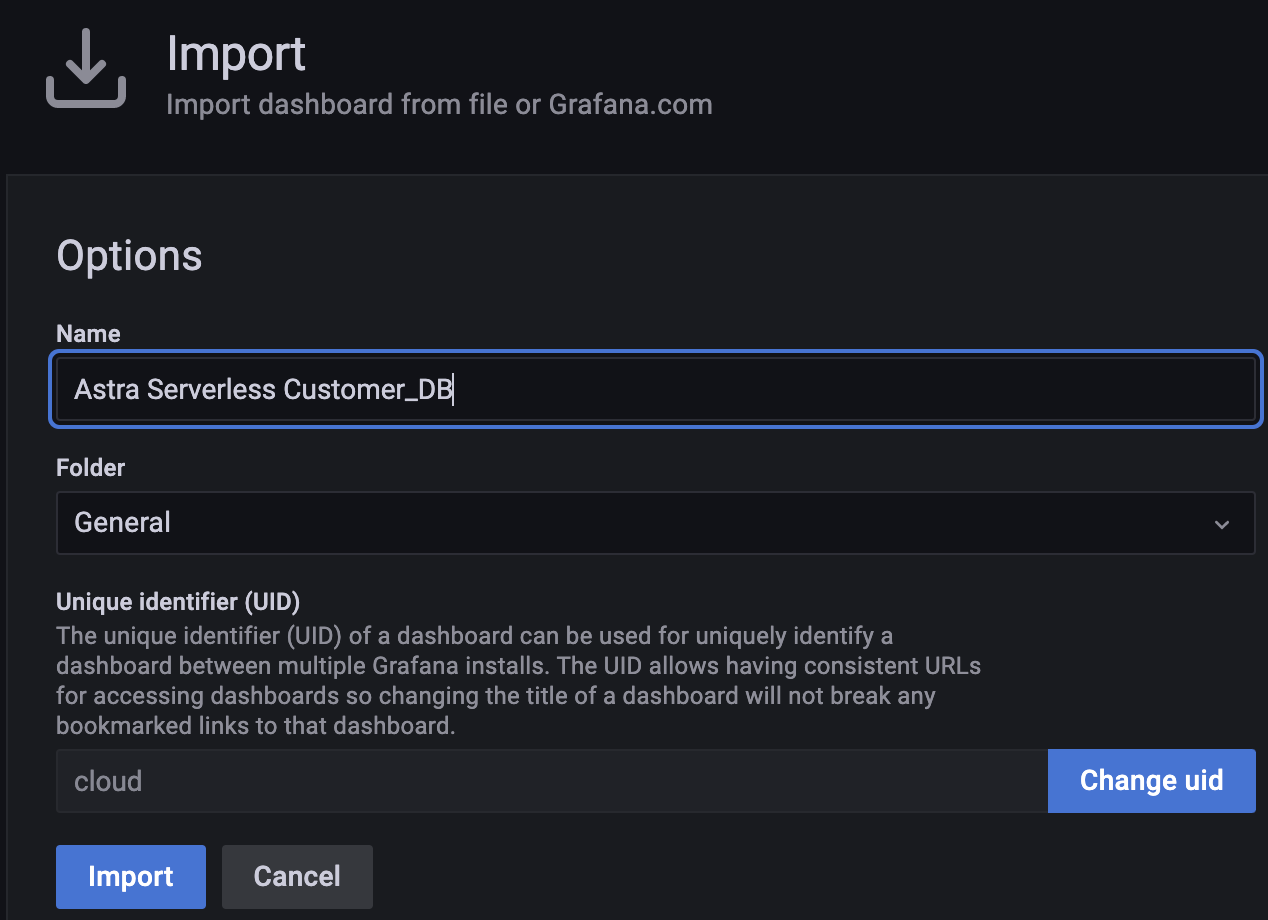

You can change the name. Example:

-

Once imported, all your Astra DB health charts will auto-populate in Grafana Cloud. Example:

Now you can use your own Grafana Cloud instance to monitor the Astra DB database’s health via its metrics.
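The JSON edits in the import steps can also be scripted with sed. This is a minimal sketch, assuming every tenant selector has the form {tenant='${__user.login}'} (or the same with escaped double quotes, as it may appear inside a JSON string) and is the only label inside its braces; the function and file names are hypothetical. Review the result before importing, because the second rule renames every coordinator_ token that is not already part of a longer name, including any outside expr fields.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the dashboard JSON edits to text read from
# stdin and write the result to stdout.
#   Rule 1: replace {tenant=...${__user.login}...} with {} (assumes the
#           tenant label is the only label in the braces).
#   Rule 2: prefix coordinator_ with astra_ unless it is already preceded
#           by a letter, digit, or underscore (so astra_coordinator_ is left alone).
rewrite_astra_exprs() {
  sed -E \
    -e 's/\{tenant=[^$]*\$\{__user\.login\}[^}]*\}/{}/g' \
    -e 's/(^|[^A-Za-z0-9_])coordinator_/\1astra_coordinator_/g'
}

# Usage, with hypothetical file names:
# rewrite_astra_exprs < dse-cluster-condensed.json > astra-dashboard.json
```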

What’s next?

See the following related topics.

DevOps v2 API reference

In addition to this topic, see: